How to use VRidge with Kinect

Yesterday I told you what VRidge is and reviewed this cool product, which lets you enjoy PC virtual reality experiences with your Cardboard mobile headset.

Today I want to focus on one of its features: the ability to use a Microsoft Kinect to emulate positional tracking of the headset. This gives you what mobile headsets really lack: positional tracking, and with it room-scale functionality. The system works in a very simple way: the head position detected by the Kinect is used as the position of the headset in the virtual world, much like what we do in our ImmotionRoom solution. But how do you make it work? And does it really work well? I’ll try to answer these questions in this article.

UPDATE: I’ve written a new article that offers a more elegant way of using VRidge with Kinect through the ImmotionRoom system. You can find it here. Otherwise, keep reading to learn the standard way, which involves open-source software like OpenTrack.

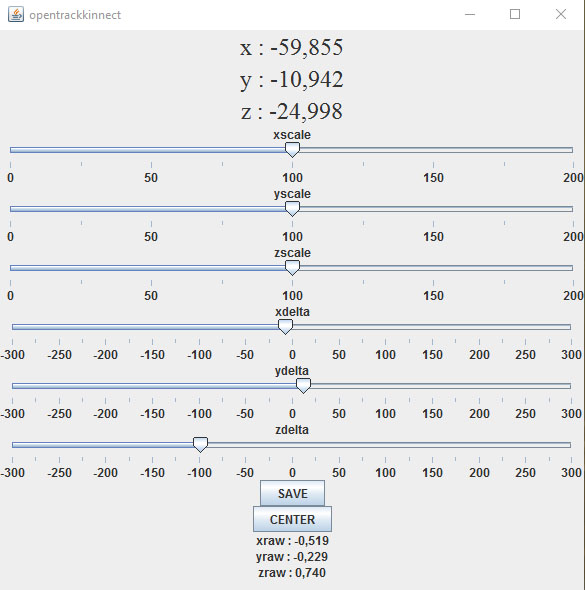

Making the Kinect v2 work with VRidge has not been that simple, and that’s why a tutorial can be useful. First of all, you have to set up VRidge as I explained yesterday and have a Kinect connected to your PC. Then you have to download opentrackkinect from GitHub. Opentrackkinect is a small Java application that retrieves your head position as read by the Kinect and passes it to the OpenTrack application (more on this later). You can download the source code and build it with Eclipse (as I did, the super-nerdy way) or download the release executables from here (naaaah). Launch opentrackkinect: you should see a very simple window whose first three numbers express the position of your head in a frame of reference that you can recenter at any time.
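To make the idea of “read the head position and pass it to OpenTrack” more concrete, here is a minimal Python sketch of that last hop. It is not opentrackkinect’s actual code (which is Java), and the helper names are hypothetical. It assumes that OpenTrack’s UDP input listens on port 4242 and expects each packet to hold six little-endian doubles (x, y, z in centimetres, then yaw, pitch, roll in degrees), and that head positions arrive in metres, as in the Kinect v2 SDK’s camera space.

```python
import socket
import struct

# Assumption: OpenTrack's UDP input listens here (port 4242, as in this tutorial).
OPENTRACK_ADDRESS = ("127.0.0.1", 4242)

# Assumed packet layout: six little-endian doubles: x, y, z, yaw, pitch, roll.
POSE_FORMAT = "<6d"


def recenter_cm(head_m, origin_m):
    """Head position relative to a recentered origin, converted from metres to cm."""
    return tuple((h - o) * 100.0 for h, o in zip(head_m, origin_m))


def pack_pose(x_cm, y_cm, z_cm, yaw_deg=0.0, pitch_deg=0.0, roll_deg=0.0):
    """Pack one pose into a 48-byte UDP payload."""
    return struct.pack(POSE_FORMAT, x_cm, y_cm, z_cm, yaw_deg, pitch_deg, roll_deg)


def send_head_position(sock, head_m, origin_m, address=OPENTRACK_ADDRESS):
    """Send only the position. Rotation stays at zero because VRidge will take
    orientation from the phone, not from the Kinect."""
    x, y, z = recenter_cm(head_m, origin_m)
    sock.sendto(pack_pose(x, y, z), address)
```

In a real loop you would open the socket once with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`, store the current head position as the origin whenever the user asks to recenter, and call `send_head_position` for every Kinect body frame. Depending on how the axes are oriented on each side, some of them may need to be flipped.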

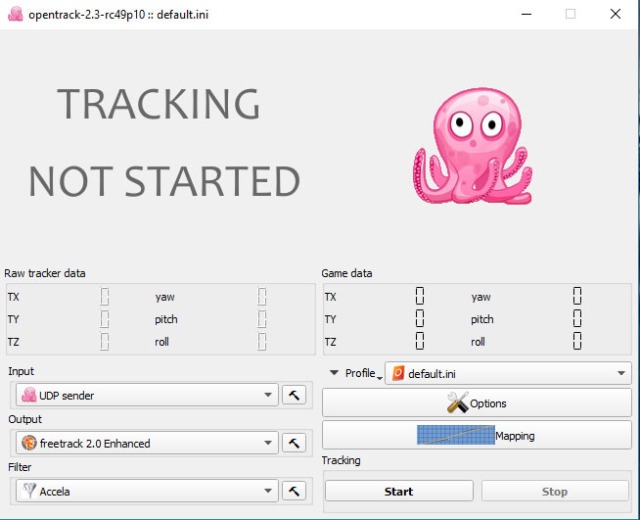

OK, now you have to download OpenTrack. What is OpenTrack? It is an open-source program that reads the tracked position of a point from almost any source and converts it into many kinds of output. For example, you can read the position of a marker seen by your PC’s camera and turn it into a mouse position. Very cool! Download OpenTrack from its releases page, taking care to choose the correct version. I say this because at the time of writing the latest version is 2.3-rc99-p11, but it doesn’t work with opentrackkinect, so you have to use 2.3-rc49p10. Things may have changed by the time you read this article, so start by downloading the latest release and, if it doesn’t work, try downgrading. After downloading it, have a look at its wiki if you want, and then start OpenTrack.

Now we have to tell OpenTrack to take its input data (the tracked point position) from opentrackkinect: to do that, select “UDP sender” as Input (as in the picture) and check that its settings use port 4242. To make OpenTrack send data to VRidge, set “freetrack 2.0 Enhanced” as Output, and that’s it. The chain should be clear: opentrackkinect reads your head position and sends it to OpenTrack, which forwards it to VRidge to use as positional tracking. Cool, isn’t it?

Start OpenTrack streaming by pushing the Start button (you don’t say?): you should see all the numbers change as you move, and the little pink octopus should follow your head. If the octopus doesn’t move, you may not be visible to the Kinect, your firewall may be blocking the data stream, or something in the setup may be wrong.
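If the octopus stays still, it helps to know whether any packets reach the PC at all. The sketch below is a hypothetical troubleshooting helper, not part of OpenTrack. With OpenTrack closed (only one program can bind the port), it listens on UDP port 4242 for a few seconds and prints what arrives, assuming each packet holds six little-endian doubles (x, y, z, yaw, pitch, roll). If nothing shows up while opentrackkinect is running and you are standing in front of the sensor, the Kinect or the firewall is the likely culprit.

```python
import socket
import struct

POSE_FORMAT = "<6d"  # assumed layout: x, y, z, yaw, pitch, roll as little-endian doubles


def unpack_pose(packet):
    """Decode one packet, or return None if its size doesn't match the layout."""
    if len(packet) != struct.calcsize(POSE_FORMAT):
        return None
    return struct.unpack(POSE_FORMAT, packet)


def listen(port=4242, timeout_s=5.0, max_packets=10):
    """Print up to max_packets poses received on the given UDP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        sock.settimeout(timeout_s)
        for _ in range(max_packets):
            try:
                packet, sender = sock.recvfrom(1024)
            except socket.timeout:
                print(f"Nothing received on UDP port {port} in {timeout_s} s")
                return
            print(sender, unpack_pose(packet))
```

Call `listen()` from a Python prompt, then start opentrackkinect and move in front of the Kinect.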

Last step: launch the VRidge application on both phone and PC, then open the settings of the VRidge PC application and set it to take position from freetrack and orientation from the headset. All done! Now, if you launch a Riftcat or SteamVR experience, you’ll have positional tracking from the Kinect… super-awesome! Full room-scale games with a Cardboard! Oculus games are not supported yet, but that’s a work in progress.

But… how good is this tracking? Is it comparable to the Oculus one? Well, absolutely not. It’s a good start, but since it relies on filtered data from an external tracking sensor, it’s quite slow and imprecise. Tracking in our ImmotionRoom solution is better, but we all suffer from the same problem: the Vive’s dedicated laser tracking is ultra fast and precise, while Kinect tracking still has to improve. The problem we have really solved is that our solution lets you use multiple Kinects and so get a complete 360-degree playground. With only one Kinect you’re forced to look towards the sensor, or the tracking becomes unusable: if you play a VRidge+Kinect game and turn to face away from the Kinect, the tracking may go crazy. Maybe ImmotionRoom services + VRidge could be a solution for this 😉
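Why does filtering make the tracking feel slow? Here is a minimal illustration that uses a plain exponential moving average as a stand-in; the filters actually applied by opentrackkinect and OpenTrack may well differ. The stronger the smoothing (the smaller `alpha`), the more Kinect frames it takes for the filtered position to catch up with a sudden head movement. The Kinect v2 delivers body data at 30 frames per second, so every frame of lag costs about 33 ms.

```python
import math

KINECT_FPS = 30.0  # Kinect v2 body-tracking frame rate


def ema(samples, alpha):
    """Exponential moving average: y = alpha * x + (1 - alpha) * previous y."""
    filtered = []
    y = samples[0]
    for x in samples:
        y = alpha * x + (1.0 - alpha) * y
        filtered.append(y)
    return filtered


def frames_to_settle(alpha, fraction=0.9):
    """Frames needed to cover `fraction` of a sudden step in position.

    After n frames the EMA has covered 1 - (1 - alpha) ** n of the step.
    """
    return math.ceil(math.log(1.0 - fraction) / math.log(1.0 - alpha))


def settle_time_ms(alpha, fraction=0.9):
    """The same delay expressed in milliseconds at the Kinect frame rate."""
    return 1000.0 * frames_to_settle(alpha, fraction) / KINECT_FPS
```

With an illustrative `alpha` of 0.3, `frames_to_settle(0.3)` gives 7 frames, roughly 233 ms before the filtered head position covers 90% of a sudden move, on top of the sensor’s own latency. Lighter smoothing reacts faster but lets more of the Kinect’s jitter through, which is the imprecision side of the same trade-off.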

Now go and try VRidge + Kinect… and if you liked this tutorial, like it and share it with your friends!

UPDATE: I went back to experimenting with this setup, and I have to add two important things to this article.

The first one is about centering. When you start your VR experience through VRidge (for example, when you click on “Play SteamVR games”), hold your phone so that it faces your Kinect head-on (I mean, with its screen parallel to the front of the Kinect and the phone camera pointing towards the Microsoft sensor). Otherwise, your phone will consider one direction as forward while your Kinect uses another one, and the positional tracking will become a complete mess.
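A quick way to see why this alignment matters: if the phone’s idea of forward is rotated by an angle θ with respect to the Kinect’s, every displacement the Kinect measures ends up applied along a direction rotated by θ in the virtual world. The Python sketch below uses hypothetical helper names and assumes x points right and z forward on the floor plane, with positive angles turning counter-clockwise as seen from above. It rotates a step and computes the resulting error, which for a step of length d is 2·d·sin(θ/2).

```python
import math


def rotate_yaw(x, z, angle_deg):
    """Rotate a floor-plane displacement (x = right, z = forward) by angle_deg."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * z, s * x + c * z)


def step_error(distance, misalignment_deg):
    """Distance between where a step should land and where it lands when the
    two forward directions differ by misalignment_deg: 2 * d * sin(theta / 2)."""
    return 2.0 * distance * math.sin(math.radians(misalignment_deg) / 2.0)
```

For instance, `step_error(1.0, 30.0)` is about 0.52 m: with a 30° misalignment, a one-metre step forward lands about half a metre away from where you expect it in the virtual world, and at 90° (`rotate_yaw(0.0, 1.0, 90.0)`) a step forward turns into a step sideways.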

The second one is about the gyroscope. If you use VRidge with a Cardboard-like headset, you won’t have stabilized gyroscopes, so the rotation perceived by the headset will drift over time. Eventually, the headset’s forward direction will no longer be the one you started with. Since the Kinect, instead, has a fixed reference frame, you’ll end up with messed-up positional tracking. You can mitigate this by choosing simple experiences that don’t require abrupt head rotations. Again, this is a problem we have in our full-body virtual reality solution too.
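The drift can be sketched with a simple model, assuming the headset obtains its heading by integrating the gyroscope rate and that the gyroscope has a small constant bias. The bias value and the 100 Hz sample rate below are made-up numbers for illustration, not measurements of any phone. Even while you stand perfectly still, the heading error grows linearly with time, while the Kinect’s frame stays where it was.

```python
def integrate_yaw(rates_deg_s, dt_s, bias_deg_s=0.0):
    """Dead-reckon heading by summing (true rate + bias) * dt for each sample."""
    yaw_deg = 0.0
    for rate in rates_deg_s:
        yaw_deg += (rate + bias_deg_s) * dt_s
    return yaw_deg


def drift_while_still(minutes, bias_deg_s, sample_hz=100.0):
    """Heading error accumulated while the head does not move at all."""
    samples = int(minutes * 60 * sample_hz)
    return integrate_yaw([0.0] * samples, 1.0 / sample_hz, bias_deg_s)
```

With these illustrative numbers, `drift_while_still(10, 0.01)` is about 6°: after ten minutes the headset’s forward has silently rotated by that much relative to the Kinect, so the mismatch described in the centering note above grows the longer you play.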

Disclaimer: this blog contains advertisements and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.