How to get started with Oculus Quest hands tracking SDK in Unity

Welcome to 2020! I want to start this year and this decade (that will be pervaded by immersive technologies) with an amazing tutorial about how you can get started with the Oculus Quest hands tracking SDK and create fantastic VR experiences with natural interactions in Unity!

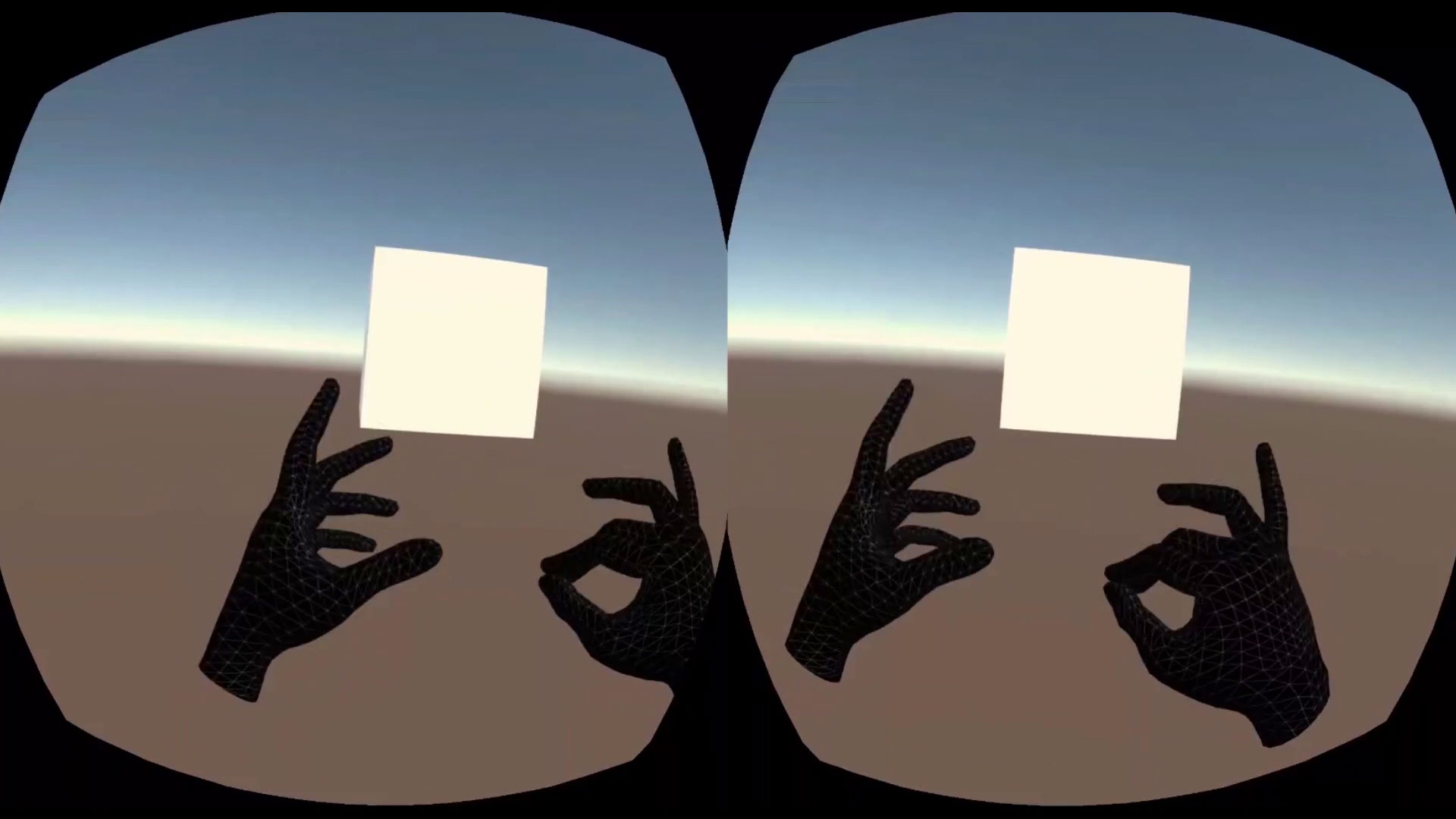

In this tutorial, I will teach you how to download the SDK and create a mesmerizing application where you can change the color of a cube by touching it with your index fingers (the left index will make it blue and the right one will make it green) and by pinching the middle finger and the thumb of your left hand together (that will make the cube red). Isn’t this the killer app of VR?

I will make the experience in two different ways, using both the standard components and also the interaction engine of the Oculus SampleFramework, highlighting the pros and cons of both solutions!

Are you ready?

How to get started with Oculus Quest hands tracking SDK in Unity – Video Tutorial

If you like video tutorials, I have made a very long one for you, explaining everything you need to know on the Oculus Hands Tracking SDK: where to find it, how it is made and how to integrate it into your Unity project, in two different ways. I explain all the code by voice and show you the final results all live. It is a step by step guide that will make you a hands-tracking-SDK master!

You can find the scripts needed in the project in the PasteBin snippets below in this article, or in the description of the video.

How to get started with Oculus Quest hands tracking SDK in Unity – Textual Tutorial

Prerequisites

If you’re following this tutorial, I assume you already know:

- How to install Unity and all the Android Tools;

- How to put your Quest in developer mode;

- How to develop a basic Unity application for the Quest.

If it is not the case, don’t worry, you can follow this other tutorial of mine that covers all the above topics! When you finish it, you can come back to this one 🙂

Project creation

Let’s create a new Unity 3D project, and call it TestQuestHands. If you’re interested, for this tutorial, I used Unity 2019.2.10f1.

As soon as Unity loads, let’s switch the environment to Android: File -> Build Settings… and in the window that pops up, click on Android and then on the “Switch Platform” button. Technically, you can also switch later on, but if we do it immediately, we avoid importing the resources twice.

Let’s change some settings. In the same Build Settings popup, hit the “Player Settings…” button in the bottom-left corner. In the Project Settings window that pops up, change the “Company Name” to your company (I’ve used “Skarred”), and check that the Product Name is “TestQuestHands”. Expand the “Other Settings” tab down below. In the Graphics APIs part, click on Vulkan and then click on the little minus sign below it to remove Vulkan support (it gave me some problems in the build process, so I decided to remove it). Then scroll down to the Identification section and check that the package name is something like com.Skarred.TestQuestHands (you should have the name of your company in place of Skarred). In the Minimum API Level dropdown, select Android 4.4 (API 19).
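If you prefer to apply these settings from code, here is a minimal editor script sketch. It is not part of the original project: the class name QuestProjectSetup and the menu path are made up for illustration, and I assume the file is placed in a folder called Editor inside Assets. It only uses the standard UnityEditor PlayerSettings API, with the values mentioned in this tutorial.

```csharp
using System.Linq;
using UnityEditor;
using UnityEngine.Rendering;

// Hypothetical helper, not part of the tutorial project.
// Put this file in an "Editor" folder so Unity compiles it as editor code.
public static class QuestProjectSetup
{
    [MenuItem("Tools/Quest Hands/Apply Project Settings")]
    public static void Apply()
    {
        // Same as File -> Build Settings... -> Android -> Switch Platform.
        EditorUserBuildSettings.SwitchActiveBuildTarget(
            BuildTargetGroup.Android, BuildTarget.Android);

        // Replace "Skarred" with the name of your own company.
        PlayerSettings.companyName = "Skarred";
        PlayerSettings.productName = "TestQuestHands";
        PlayerSettings.SetApplicationIdentifier(
            BuildTargetGroup.Android, "com.Skarred.TestQuestHands");

        // Remove Vulkan from the Android graphics APIs, keeping the others.
        GraphicsDeviceType[] withoutVulkan = PlayerSettings
            .GetGraphicsAPIs(BuildTarget.Android)
            .Where(api => api != GraphicsDeviceType.Vulkan)
            .ToArray();
        if (withoutVulkan.Length > 0)
        {
            PlayerSettings.SetUseDefaultGraphicsAPIs(BuildTarget.Android, false);
            PlayerSettings.SetGraphicsAPIs(BuildTarget.Android, withoutVulkan);
        }

        // Minimum API level: Android 4.4 (API 19).
        PlayerSettings.Android.minSdkVersion = AndroidSdkVersions.AndroidApiLevel19;
    }
}
```

After Unity recompiles, the entry should appear in the Tools menu. Doing everything by hand in the Project Settings window gives the same result, so use whichever way you like best.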

Where to find Oculus Hands Tracking SDK

I’ll tell you a secret: there’s no place where you have to look for the Hands Tracking SDK. It is embedded into the Oculus Unity SDK. So go to the Unity menu and select Window -> Asset Store (or press Ctrl+9). In the search bar, type “Oculus” and choose the “Oculus Integration” package that the Asset Store will offer you. Update it if necessary, and then import it into your project. If some popups ask you to import stuff, update stuff, or restart Unity, agree to everything.

At the end of the process, you should have the Oculus plugin inside Unity.

First things first, to avoid forgetting this later on and then seeing a black display every time you build your application: go to File -> Build Settings…, select Player Settings…, scroll down and expand the “XR Settings” tab. Check the “Virtual Reality Supported” flag. Then in the Virtual Reality SDKs section, click on the “+” sign and choose “Oculus”. The Oculus SDK should now be selected as the VR SDK to use.

Back to the project: inside the Assets\Oculus folder, you should find the VR subfolder, which now also contains scripts and prefabs for basic hands tracking interactions (you can check the scene Assets\Oculus\VR\Scenes\HandTest to see a basic integration of the hands in Unity), and the SampleFramework folder with the famous example with the mini-train and the 3D buttons that you have surely already seen online (you can check the scene Assets\Oculus\SampleFramework\Usage\HandsInteractionTrainScene for a more complicated sample using hands in Unity).

Hands Tracking Implementation n.1 – Basic components

Let’s now implement the hands in our application using the standard way, the one that is advised in the Oculus SDK docs. It is very rough, but it works.

- Open the scene Assets\Scenes\SampleScene (Unity should have created it for you)

- Remove the Main Camera gameobject

- Put in the scene the OVRCameraRig prefab that you find in Assets\Oculus\VR\Prefabs

- Select the OVRCameraRig gameobject you have just added to the scene. In the inspector, look for the Tracking Origin Type dropdown menu (in the Tracking section) and choose “Floor Level”

- Still in the inspector of OVRCameraRig, look for Hand Tracking Support (in the Input section) and in the dropdown select “Controllers and Hands”. This will configure the manifest to use hands tracking or the controllers. Technically, you can also choose “Hands Only”, but Oculus says that if you do it this way, at the moment your app will be rejected from the store

- Add a cube to your scene (use the little Create menu in the Hierarchy tab and select 3D Object->Cube)

- Select the cube, and in the inspector, change its Transform so that its Position becomes (0; 1.25; 1) and its Scale 0.35 on all axes. This will be the cube we will interact with using our hands

- Look for the Box Collider component in the Cube and check the Is Trigger flag

- Expand the OVRCameraRig gameobject, and expand its child. Also expand “LeftHandAnchor” and “RightHandAnchor”

- Put in the scene the OVRHandPrefab prefab that you find in Assets\Oculus\VR\Prefabs, as a child of LeftHandAnchor. In the inspector of this new gameobject, look for the script OVR Skeleton and check “Enable Physics Capsules”. This will add a capsule collider and a rigidbody to every bone of the hand, so that we can detect its interactions with the other objects of the world.

- Put in the scene the OVRHandPrefab prefab that you find in Assets\Oculus\VR\Prefabs, as a child of RightHandAnchor. Select it, and in the inspector, change all references from Left Hand to Right Hand (there should be three). Here too, look for the script OVR Skeleton and check “Enable Physics Capsules”.

Congratulations, you have just added your hands to your VR application! The OVRHandPrefab will take care of showing them. Time to implement some interactions.

Create a new subfolder of Assets and call it Scripts (you can use the Create menu of the Project window to do that). Inside it, create a new C# Script and call it “Cube”. Assign the Cube.cs script to the Cube gameobject, by dragging the script onto the gameobject.

Double click on the Cube.cs script to open it in Visual Studio and then replace its code with the Cube script from the PasteBin snippets.

The code is heavily commented, but some notable details are:

- I had to get the references to the OVRHand scripts by looking for the gameobjects in the Start function, because there’s not a smarter way to get them;

- In the Update method, I can check if the middle and thumb fingers are pinching by calling the GetFingerIsPinching method on the OVRHand behaviour;

- In the method GetIndexFingerHandId, I work out whether the collider entering or exiting the cube is the tip of an index finger by querying the gameobject name. The capsules added to the bones all have a name made of the bone name plus “_CapsuleCollider”. I had to look inside the Oculus implementation to see how the objects are named. I haven’t found a smarter way: it seems that there is no script telling you that a gameobject is a part of one hand. It is weird. Even all the scripts managing the hands have the property telling if they belong to the left or right hand set as private, so I had to perform the check with the IsChildOf method of the transform. I think Oculus has to improve its SDK to make these interactions easier to implement (or document how to implement them).
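To make the three points above more concrete, here is a minimal sketch of a script of this kind. It is not the original Cube.cs from PasteBin: the class name CubeSketch, the helper names and the choice of going back to the initial color when the finger leaves the cube are my assumptions for illustration. In particular, “Hand_Index3” is only my guess for the bone whose capsule sits at the end of the index finger, so check the real capsule names in the Hierarchy at runtime with your version of the SDK.

```csharp
using UnityEngine;

// Hypothetical sketch, NOT the original Cube.cs of the tutorial.
public class CubeSketch : MonoBehaviour
{
    // Assumed bone name; the capsule is expected to be called
    // "<bone name>_CapsuleCollider". Verify it in your SDK version.
    [SerializeField] private string indexBoneName = "Hand_Index3";

    private OVRHand leftHand;
    private OVRHand rightHand;
    private Renderer cubeRenderer;
    private Color defaultColor;
    private Color? touchColor; // null when no index finger is touching

    private void Start()
    {
        // The hands are found by looking for their anchors in the scene.
        leftHand = FindHand("LeftHandAnchor");
        rightHand = FindHand("RightHandAnchor");
        cubeRenderer = GetComponent<Renderer>();
        defaultColor = cubeRenderer.material.color;
    }

    private void Update()
    {
        // A middle finger + thumb pinch of the left hand makes the cube red.
        bool pinching = leftHand != null &&
            leftHand.GetFingerIsPinching(OVRHand.HandFinger.Middle);
        cubeRenderer.material.color =
            pinching ? Color.red : (touchColor ?? defaultColor);
    }

    private void OnTriggerEnter(Collider other)
    {
        if (!IsIndexCapsule(other)) return;
        if (BelongsTo(other, leftHand)) touchColor = Color.blue;
        else if (BelongsTo(other, rightHand)) touchColor = Color.green;
    }

    private void OnTriggerExit(Collider other)
    {
        // My choice for the sketch: restore the initial color on exit.
        if (IsIndexCapsule(other)) touchColor = null;
    }

    private static OVRHand FindHand(string anchorName)
    {
        GameObject anchor = GameObject.Find(anchorName);
        return anchor != null ? anchor.GetComponentInChildren<OVRHand>() : null;
    }

    private bool IsIndexCapsule(Collider other)
    {
        return other.name == indexBoneName + "_CapsuleCollider";
    }

    private static bool BelongsTo(Collider other, OVRHand hand)
    {
        // The hand type is not publicly exposed, so check the hierarchy.
        return hand != null && other.transform.IsChildOf(hand.transform);
    }
}
```

The sketch relies on the cube having the Is Trigger flag checked and on “Enable Physics Capsules” being active on both hands, as set up in the steps above.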

All the rest is just logic to change the color of the cube… standard stuff if you already know how to use Unity. At this point, we’re ready to build! Save everything, and connect your Oculus Quest to your PC. Then select File -> Build Settings… . Hit the “Add Open Scenes” button, then click on Build and Run. Select the name for your APK, like “OculusHands1.apk”, let it build and then… BAM! You have your first Oculus Quest hands tracked app!

What if you can’t see your hands?

The first time I executed an app this way, I couldn’t see my hands. There was the cube, but no matter how I moved my hands in front of the headset, I could only see my controllers.

It is because you have to activate hands tracking before using your hands tracked app. You can follow this tutorial of mine to understand how to do that. Even after hands tracking is unlocked in the settings, you have to enable hands input to use your hands. So, hit your Oculus button on your Oculus Touch controllers, select Settings in the menu, and in the Settings tab, click on “Use hands” and then put down your controllers without pressing any key (every time you press a button, you activate Controller input).

After you have your hands in the Oculus UI, you can select Resume and return to your app. This time you should see your hands! And they can make the cube become red, green and blue!

The standard method works, but I find its implementation very rough, and suitable only for little experiments, or for integrating the Oculus Hands Tracking SDK with other hands libraries’ interaction systems (e.g. the ones from Leap Motion). For more complex interactions, you had better create your own library on top of it or modify the integration of the Sample Framework, which is the method that I’m going to explain to you very soon.

Hands Tracking Implementation n.2 – Oculus SampleFramework

Let’s create a new scene that uses the Oculus SampleFramework interaction system instead, which is higher-level and much more usable in my opinion.

- Create a new scene in the Assets\Scenes\ folder, and call it SampleScene2 (You can use the Create menu in the Project tab for this)

- Open the just created scene

- Remove the Main Camera gameobject

- Put in the scene the OVRCameraRig prefab that you find in Assets\Oculus\VR\Prefabs

- Select the OVRCameraRig gameobject you have just added to the scene. In the inspector, look for the Tracking Origin Type dropdown menu (in the Tracking section) and choose “Floor Level”

- Still in the inspector of OVRCameraRig, look for Hand Tracking Support (in the Input section) and in the dropdown select “Controllers and Hands”

- Add a cube to your scene (use the little Create menu in the Hierarchy tab and select 3D Object->Cube)

- Select the cube, and in the inspector, change its Transform so that its Position becomes (0; 1.25; 1) and its Scale 0.35 on all axes. This will be the cube we will interact with using our hands

- Look for the Box Collider component in the Cube and check the Is Trigger flag

- In the Project tab look for the Assets\Oculus\SampleFramework\Core\HandsInteractions\Prefabs\Hands prefab and add it to the scene. This will magically give you your hands in the scene (with the style you choose), plus a lot of facilities in the code to query hands status

- Also add to the scene the Assets\Oculus\SampleFramework\Core\HandsInteractions\Prefabs\InteractableToolsSDKDriver prefab. This is the secret sauce that will give you an easier interaction engine

- Select the gameobject you just added, and in the inspector, in the InteractableToolsCreator behaviour, leave only one tool for the Left and the Right Hand, and that tool must be FingerTipPokeToolIndex (a prefab that is in the same folder as the Hands prefab). This tool will attach to the index finger of both hands and let them interact with some specific objects in the scene

- In the Assets\Scripts folder, create a new script called Cube2.cs, and replace its code with the Cube2 script from the PasteBin snippets

- In the Assets\Scripts folder, create a new script called ActionTriggerZone.cs, and replace its code with the ActionTriggerZone script from the PasteBin snippets

- Add the script Cube2.cs and then ActionTriggerZone.cs to the Cube gameobject

- We have to fix a little bug in the Oculus plugin to make everything work. Look for the file “BoneCapsuleTriggerLogic.cs” (you can use the search box of the Project section for this). Open it and replace every reference to “ButtonTriggerZone” with “ColliderZone”. They are on lines 43 and 52 at the time of writing. ButtonTriggerZone is a subclass of ColliderZone, and by making this substitution, we make the interaction system work with all subclasses of ColliderZone, and not only with ButtonTriggerZone. Trust me that it is the right thing to do 🙂

Ok, the project is completed, so let me explain how it works. The Hands gameobject offers easy management of the hands, and in fact in the script Cube2.cs, I can just query for the pinch status by calling a method on Hands.Instance.LeftHand, without having to search for gameobjects in the scene. That’s very handy (pun intended).

The interaction system that triggers the cube is handled by the InteractableToolsInputRouter, which does the magic. The scene contains some elements called “Tools”, which in this case manage the input of the hands, and other elements called “Interactables”, which can perform some actions when triggered by the Tools. So, an “Interactable” may be a button, and a “Tool” may be the fingertip of a hand.

We have added two tools to the scene, thanks to the InteractableToolsCreator script, and both are FingerTipPokeToolIndex prefabs. If you dig into the Oculus code, you discover that this tool is basically a sphere that follows the tip of one finger (the index finger, in this case) and that interacts with Interactable elements by contact (other tools can interact from a distance using ray casting).

You can associate some interaction zones to every Interactable element. These zones define different regions where the Interactable is sensitive to the action of the Tools. For instance, you may have a proximity zone that just signals to the Interactable that a tool is nearby, or an action zone that signals to the Interactable that it must activate when a tool is inside it. These interaction zones are colliders with an associated ColliderZone script. Our ActionTriggerZone script is just a special ColliderZone that takes the collider of the cube, and when a tool enters it, it signals to the Interactable on the same gameobject (that is, our Cube2 script) that it must activate itself.

Cube2 is just an Interactable script, that implements the UpdateCollisionDepth abstract method of its parent class. This method gets called to notify the Interactable of a new status of the tools interacting with it (e.g. a tool has entered the action zone, or the proximity zone, etc…). Inside this method, we implement the logic of coloring the cube if we detect that the tool entering the action zone is an index finger of the left or right hand.
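If the relationship between Tools, zones and Interactables still feels abstract, this toy model in plain C# may help. Be careful: these are not the Oculus SampleFramework classes. All the names starting with “Toy” are invented for this illustration, the signatures are much simpler than the real ones, and there is no Unity physics involved. It only shows the flow of notifications: a tool enters a zone, the zone tells its Interactable which depth has been reached, and the Interactable decides what to do.

```csharp
using System;

// Toy stand-ins, NOT the Oculus SampleFramework types.
public enum ToyHand { Left, Right }
public enum ToyDepth { None, Proximity, Action }

// A "Tool": something attached to a hand that can touch things.
public sealed class ToyTool
{
    public ToyHand Hand { get; }
    public string Finger { get; }
    public ToyTool(ToyHand hand, string finger) { Hand = hand; Finger = finger; }
}

// An "Interactable": gets notified when a tool changes depth.
public abstract class ToyInteractable
{
    public event Action<string> StateChanged;
    public abstract void UpdateCollisionDepth(
        ToyTool tool, ToyDepth oldDepth, ToyDepth newDepth);
    protected void RaiseStateChanged(string state) { StateChanged?.Invoke(state); }
}

// A "zone": a region of the Interactable associated with one depth.
public sealed class ToyZone
{
    private readonly ToyInteractable owner;
    private readonly ToyDepth depth;
    public ToyZone(ToyInteractable owner, ToyDepth depth)
    {
        this.owner = owner;
        this.depth = depth;
    }
    public void ToolEntered(ToyTool tool)
    {
        owner.UpdateCollisionDepth(tool, ToyDepth.None, depth);
    }
    public void ToolExited(ToyTool tool)
    {
        owner.UpdateCollisionDepth(tool, depth, ToyDepth.None);
    }
}

// The cube: colors itself when an index finger reaches the action zone.
public sealed class ToyCube : ToyInteractable
{
    public string Color { get; private set; } = "white";

    public override void UpdateCollisionDepth(
        ToyTool tool, ToyDepth oldDepth, ToyDepth newDepth)
    {
        if (newDepth == ToyDepth.Action && tool.Finger == "Index")
            Color = tool.Hand == ToyHand.Left ? "blue" : "green";
        else if (newDepth == ToyDepth.None)
            Color = "white";
        else
            return; // proximity only, or another finger: nothing changes
        RaiseStateChanged(Color);
    }
}

public static class ToyDemo
{
    public static void Main()
    {
        var cube = new ToyCube();
        var actionZone = new ToyZone(cube, ToyDepth.Action);
        cube.StateChanged += color => Console.WriteLine("Cube is now " + color);

        var leftIndex = new ToyTool(ToyHand.Left, "Index");
        actionZone.ToolEntered(leftIndex);
        actionZone.ToolExited(leftIndex);
        actionZone.ToolEntered(new ToyTool(ToyHand.Right, "Index"));
    }
}
```

In the real framework, the zones are colliders and the notifications come from the tools touching them, but the division of responsibilities is the same idea.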

Notice that Interactable also has a handy InteractableStateChanged action that can be used to notify other gameobjects of a new status of the Interactable (and so a button activated with the hands can trigger other elements in the scene). That’s why I say that this method is far more flexible and easier to use: just by modifying some scripts already provided by Oculus, you can implement most of the hands interactions you need in VR.

That said, it’s time to build our app!

Select File -> Build Settings… . Remove the SampleScene scene from the build settings and hit the “Add Open Scenes” button to add the current one, then click on Build and Run. Select the name for your APK, like “OculusHands2.apk”, let it build and then… BAM! Again a handy cube! Notice that the hands have a different visual aspect, and also have a colored sphere on the index fingertip, which is the tool that we just added.
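If you rebuild often, the same Build and Run step can also be triggered from a small editor script. This is only a sketch using the standard BuildPipeline API of Unity: the class name QuestBuild, the menu path and the “Builds” output folder are my assumptions, while the scene path and the APK name are the ones used in this tutorial. The file must be in an Editor folder.

```csharp
using UnityEditor;
using UnityEditor.Build.Reporting;
using UnityEngine;

// Hypothetical helper, not part of the tutorial project.
public static class QuestBuild
{
    [MenuItem("Tools/Quest Hands/Build And Run Scene 2")]
    public static void BuildAndRun()
    {
        var options = new BuildPlayerOptions
        {
            // Only the second scene goes into the build.
            scenes = new[] { "Assets/Scenes/SampleScene2.unity" },
            // "Builds" is an assumed output folder next to Assets.
            locationPathName = "Builds/OculusHands2.apk",
            target = BuildTarget.Android,
            // Install and launch on the connected Quest after building.
            options = BuildOptions.AutoRunPlayer
        };

        BuildReport report = BuildPipeline.BuildPlayer(options);
        if (report.summary.result == BuildResult.Succeeded)
            Debug.Log("Build succeeded: " + report.summary.outputPath);
        else
            Debug.LogError("Build failed with result " + report.summary.result);
    }
}
```

The Quest must be connected to the PC and in developer mode, exactly as for the manual Build and Run.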

Further steps

After you’ve made this simple app, it’s time to unleash your imagination!

To study the Oculus Hands Tracking SDK further, I advise you to:

- Check the official documentation page: https://developer.oculus.com/documentation/quest/latest/concepts/unity-handtracking/

- Check the sample scene that you can find in Assets\Oculus\SampleFramework\Usage\HandsInteractionTrainScene and study how all the interactions have been implemented

- Check the awesome Unity plugin that Chris Handzlik has made to let you test hands tracked apps inside Unity in play mode: https://github.com/handzlikchris/Unity.QuestRemoteHandTracking

I hope you liked this tutorial and that it will be useful to you to create amazing apps on the Oculus Quest! If you want to support my work in creating high-quality articles on AR/VR, please subscribe to my newsletter and donate to my Patreon account!

Thank you and happy 2020 in VR!
