Threedy AI automatically converts 2D photos into 3D assets for AR!

Threedy AI is a startup whose main expertise is the automatic conversion of 2D photos into 3D models. It can automatically fetch the photos of your items from your website and transform them into 3D models for prices as low as $5/model! It is a very innovative company, and I think that it can be very important for the transition from 2D to 3D.

I’ve had the pleasure of interviewing Nima Sarshar, the CTO of the company, and talking with him about what they are doing, how they do it, and what their vision of the future is. It’s been a great talk, and if you have the time, I advise you to watch the video here below!

Otherwise, keep reading for the usual summary of the key points of the interview 🙂

The transition from 2D to 3D

At the European Innovation Academy, some years ago, I met José, a Portuguese investor. He told me that he became rich by creating analog/digital converters: he noticed that the whole world is analog, but in those years everything was transitioning to digital, so he had the smart idea of creating products that could be the bridge between these two worlds, and he made a very profitable business out of it.

Now I think that we are in another transitional moment: from a digital world in 2D to another one that is fully spatial, in 3D, thanks to AR and VR. This transition can be very painful as well: for instance, we all dream of shops becoming fully virtual, of e-commerce websites all implementing AR, but this has a huge bottleneck: the creation of all the 3D models. All the most important shops, like Amazon, eBay, etc… have plenty of 2D pictures of their items, but very limited access to 3D models of them, and this is a big problem for implementing immersive solutions. And if Amazon can (maybe) have a huge in-house AI do that, medium-sized companies don’t have these means, and they have to ask a 3D artist to do the work for them, with huge upfront costs. As a consultant, I’ve found myself in this situation many times, and I lost many customers because, just to have an AR shop, they had to spend 20-50K upfront on the 3D models alone, and it was too big an expense for them.

But luckily there are some companies that, like the Portuguese investor, had the intuition of trying to create a bridge between the two different worlds, and among them, one of the most interesting ones is Threedy AI.

Meet Nima Sarshar and Threedy AI

Nima Sarshar was working at Apple when ARKit was being developed, and he immediately understood the possibilities that AR offered, especially for e-commerce, because AR is great for trying things at home and can make the life of customers better. But he also realized right away that “3D content problem would have been the issue”: to offer this AR feature, shops and stores would need the 3D models of all their objects, something that most of them lack.

He understood that the world would need an automated solution to create 3D models in a fast and cheap way, so he left Apple and, with a team of talented people, co-founded the startup now known as Threedy AI. He has worked since then to exploit AI and ML to create 3D models automatically from their corresponding 2D photos, something that online shops already have.

How does it technically work?

“We had no idea on how to solve this problem after we had raised the money”, Nima candidly told me. They started with the usual deep learning approach, where basically you try to infer the 3D shape of an object by dynamically creating a shape whose projection becomes similar to the images that you already have, but the results were pretty unsatisfying.

So they started thinking about an alternative approach, also because we as humans do not reconstruct the 3D shape of an object from a photo by imagining a shape that can fit a projection. They reasoned that most objects are made of parts, and that these parts usually have a simple shape. If an AI could understand what parts a single object is built of, then it could reconstruct the whole shape of the object.

So Threedy AI has built a database of 10,000 possible shapes, and thanks to a patent-pending machine learning technology, every time it is fed a photo, it tries to work out which mix of the preset simple shapes gives a projection that is compatible with the photos provided. According to Nima, this gives far better results.
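To give a rough idea of what "finding the preset shape whose projection is compatible with the photo" can mean, here is a toy sketch in Python. It is not Threedy AI's patent-pending method, which wasn't disclosed in the interview: the two primitives, the 16x16 grid, and the intersection-over-union score are all my own assumptions, and the sketch picks a single primitive rather than a mix of them.

```python
from itertools import product

GRID = 16  # side of the toy silhouette grid, in pixels (an assumption)

def box_mask(w, h):
    """Silhouette of a centered w x h box, as a set of (x, y) pixels."""
    x0, y0 = (GRID - w) // 2, (GRID - h) // 2
    return {(x, y) for x in range(x0, x0 + w) for y in range(y0, y0 + h)}

def disc_mask(r):
    """Silhouette of a centered disc of radius r, as a set of (x, y) pixels."""
    c = (GRID - 1) / 2
    return {(x, y) for x in range(GRID) for y in range(GRID)
            if (x - c) ** 2 + (y - c) ** 2 <= r * r}

def iou(a, b):
    """Intersection over union of two pixel sets (1.0 means identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def candidates():
    """A tiny hypothetical 'database' of preset shapes with their parameters."""
    for w, h in product(range(2, GRID + 1, 2), repeat=2):
        yield ("box", (w, h)), box_mask(w, h)
    for r in range(1, GRID // 2 + 1):
        yield ("disc", (r,)), disc_mask(r)

def best_primitive(target):
    """Return (score, label) of the preset shape that best matches the target."""
    return max((iou(mask, target), label) for label, mask in candidates())

# Usage: pretend this silhouette was segmented from a product photo.
score, label = best_primitive(box_mask(10, 4))
print(label, score)
```

A real system would of course work with 3D shapes, several photos, and learned features, but the loop is the same in spirit: propose preset shapes, project them, and keep what matches the picture.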

After a shape has been found, the second problem becomes finding the material of the object. Here Threedy AI has two different approaches:

- Finding the material that looks most similar to the one in the picture from a big database of preset materials (again, a problem of machine learning and classification)

- Reconstructing the texture of the object by flattening it. Since from the previous step you know the shape of the object, you can try to flatten the texture of its parts from the pictures, and try to reconstruct the original material.
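The first approach is basically a nearest-neighbor search, and a minimal Python sketch can make it more concrete. Everything here is an assumption of mine for the sake of illustration: the four materials, their reference colors, and the use of the average RGB color as the only feature (a real classifier would rely on much richer, learned features).

```python
# Hypothetical material database: name -> reference average RGB color.
MATERIALS = {
    "oak wood": (181, 136, 87),
    "brushed steel": (170, 175, 180),
    "black leather": (30, 28, 27),
    "white lacquer": (240, 240, 238),
}

def mean_color(pixels):
    """Average RGB color of a list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))

def closest_material(pixels, materials=MATERIALS):
    """Name of the preset material whose color is closest to the patch."""
    avg = mean_color(pixels)

    def dist2(name):
        # Squared Euclidean distance in RGB space.
        return sum((a - b) ** 2 for a, b in zip(avg, materials[name]))

    return min(materials, key=dist2)

# Usage: two pixels sampled from a brownish part of a product photo.
print(closest_material([(180, 140, 90), (175, 130, 85)]))
```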

The startup is still working on understanding which approach is best. Of course, everything is so cutting-edge that both the 3D shape reconstruction and the 2D material detection are under heavy research and get constantly refined.

What is the accuracy of this solution?

Of course, this algorithm shines with objects that are composed of parts and get assembled, like for instance furniture. It also works with objects that are molded. With softer shapes or organic elements (e.g. plants, animals, etc…) it is not ideal at the moment, but Nima told me that their goal is to expand its reach in the future.

With objects composed of parts, the fully automated system correctly reconstructs 75-80% of the provided objects. 10-15% are ok-ish and require just some manual intervention. The remaining 5-10% must be rebuilt completely by hand. I think that it is already a good percentage to start with, but of course, it must improve over time, because, as Nima says, the manual intervention part requires human work that is orders of magnitude slower than the ML one, and so it slows down the whole process.

The cost per model

The cost of a 3D model of a piece of furniture is around $60 if you have an offshore 3D team, and can be around $100-150 if you go through a professional agency. With Threedy AI, the cost is around $5-15 per model, and the better the automated process works, the closer the price gets to the $5 limit. This means huge savings for all those companies wanting to convert all their 2D items into 3D assets in bulk. The service is intended for medium and large businesses (you can’t request just a single model and expect to pay $5 for it, of course), but there are some specialized packages for small businesses as well.

You can see a gallery of converted models on the Threedy AI website to evaluate their quality: https://www.threedy.ai/model-gallery
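To see what these per-model prices mean in bulk, here is a quick back-of-the-envelope calculation in Python. The prices are the approximate ones quoted above; the catalog size of 1,000 items is just a hypothetical example of mine.

```python
# Approximate price ranges per model in USD, as quoted above: (low, high).
PRICE_PER_MODEL = {
    "offshore 3D team": (60, 60),
    "professional agency": (100, 150),
    "Threedy AI": (5, 15),
}

def catalog_cost(n_models):
    """Total cost range (low, high) of converting n_models with each option."""
    return {who: (low * n_models, high * n_models)
            for who, (low, high) in PRICE_PER_MODEL.items()}

# Usage: a hypothetical shop with 1,000 items to convert.
for who, (low, high) in catalog_cost(1000).items():
    print(f"{who}: ${low:,} - ${high:,}")
```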

Beyond 3D models

The startup has quickly realized that just providing 3D models was not enough, especially because a business that only sells cheap conversions is not going to be sustainable (after everyone has made the conversions, the startup loses its whole market), so Threedy AI has worked on understanding what kind of services to provide with these models.

The first one that it offers is of course a 3D model management service. This is not unique in the market, but it is still very useful for the companies that use these models in their stores and need to search, catalog, and in general manage the 3D assets they own.

The second one is a solution that the stores can offer to customers to let them try their 3D assets inside their environments to see how they fit. It is like an off-line AR try-on feature, and they call it AR++.

AR++

Augmented Reality is cool to let you try objects in your space before buying them, and many companies like Amazon and IKEA are already exploiting it. Thanks to AR, you can visualize how a model fits inside your space and watch it from all angles.

But, Nima says, this works only at the end of the sales funnel: you have already decided to buy a sofa, and so you want to try it in AR and see how it fits. But what about the beginning of the process, when you are maybe in the office during the lunch break and you’re exploring possibilities for your bedroom? What if you’re just in bed with your wife and you want to choose a piece of furniture? And also, what if you don’t have an AR-enabled phone?

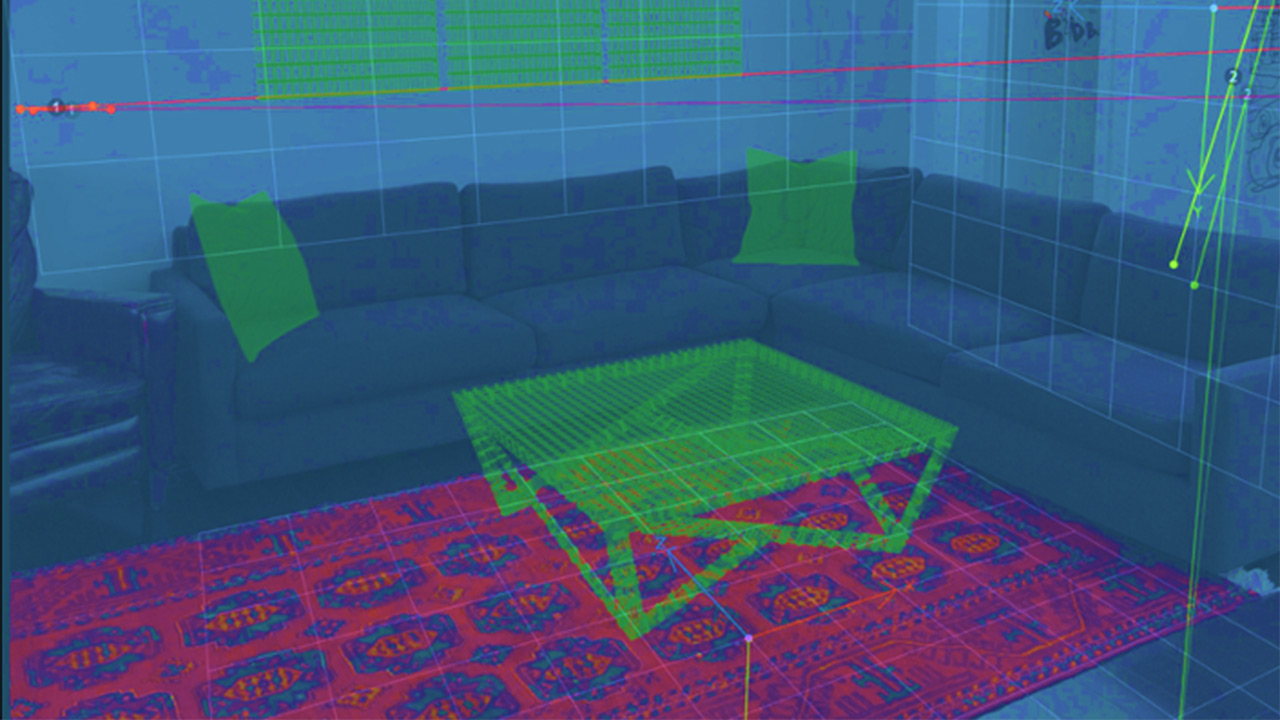

Threedy AI has thought about a different kind of try-on feature that works offline. You take a picture of your room and feed it to the system. The system immediately understands where the walls and the floor are, and also the lighting of the scene. After that, you can browse the shop and put the various 3D models of the furniture inside this picture, in a context-aware way. This means that if you put a table inside the scene, the table immediately finds the floor and can only move on it. It is also lit according to the light that comes from the window that you have framed in the picture. You can also put paintings and other decorations on the walls, for instance. Threedy AI is also working on letting you substitute objects: that is, if you are buying a new bed, thanks to this system you could put a new bed in the photo of your room on top of the previous one, and the system would erase the previous bed so that you can see how the new one fits with the rest of the room. This kind of diminished reality is quite hard to do, so it is still a work in progress.

You can put in all the models that you want and move them until you have found the perfect configuration. In the future, the system may also suggest how to furnish your room, but for now, what you can do is take a snapshot and share it with your friends to hear their suggestions.

Imagine it as a room configurator like the ones other shops already have, but one that works automatically with a single picture of your room. Using it, you can, wherever you are, try how models fit inside your room… and when you’re almost sure about what to buy, you can try it in AR live inside your space.
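How can a table "only move on the floor"? Nima didn't go into the details of how AR++ does it, but one common way is to cast a ray from the camera through the point you tap in the picture and intersect it with the floor plane. Here is a minimal Python sketch of that idea, assuming a horizontal floor at a known height and an already estimated camera position and ray direction (all names are hypothetical).

```python
def place_on_floor(camera_pos, ray_dir, floor_y=0.0):
    """Point where a ray from the camera hits the horizontal floor plane.

    camera_pos and ray_dir are (x, y, z) tuples with y pointing up and the
    camera assumed to be above the floor. Returns None if the ray points
    upward or runs parallel to the floor, so it can never reach it.
    """
    ox, oy, oz = camera_pos
    dx, dy, dz = ray_dir
    if dy >= 0:
        return None
    t = (floor_y - oy) / dy  # distance along the ray to the floor plane
    return (ox + t * dx, floor_y, oz + t * dz)

# Usage: camera held at 1.5 m, user taps a point that looks down and forward.
print(place_on_floor((0.0, 1.5, 0.0), (0.0, -1.5, 3.0)))
```

Since the returned point always has the floor height as its vertical coordinate, a piece of furniture positioned this way can slide around the room but never float or sink.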

Automatic conversion with just one tag

What if you already have a shop and want to try Threedy AI to convert all the 2D photos of your items into 3D models that can be explored in AR or put in the AR++ configurator? How can you do that? Well, easier done than said: you just put a special JavaScript tag inside your webpage and then you can go to sleep.

The JavaScript magic will parse all the 2D images, send them to the 3D-converter system in the cloud, and return the result. On your account page on Threedy AI, you will see the details of the process, and you will be able to approve the models or reject them in case you’re not satisfied (in this case they will be modified by hand). You will also be able to manage all your assets from there. After the 3D models are ready, they can be put on your store page in place of the 2D pictures, with two buttons that enable the virtual try-on and the AR try-on.

So with just a script, you can transform your standard store into one that is ready for the future.

Threedy AI is currently able to churn out 5000 models/week. And for big customers that want to implement its system, it offers the possibility of having 215 models for free (for an estimated value of around $25000 if they were done through a 3D agency), with no strings attached, to see if the results are satisfying enough. For smaller customers, the offering is a bit different, and usually they’re offered fixed-price packages.
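The real tag is JavaScript and I don't know its internals, but the first step, finding all the product images on a page, is easy to picture. Here is a small Python sketch of it that uses only the standard library; the class name, the function name, and the example URLs are hypothetical, and the upload to the conversion service is left out on purpose because its API wasn't described.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class ImageCollector(HTMLParser):
    """Collects the absolute URLs of all <img> tags found in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:  # skip images without a usable src attribute
                self.image_urls.append(urljoin(self.base_url, src))

def collect_product_images(html, base_url):
    """Return the image URLs of a page, resolved against its base URL."""
    parser = ImageCollector(base_url)
    parser.feed(html)
    return parser.image_urls

# Usage with a made-up product page.
page = '<div><img src="/img/sofa.jpg"><img src="https://cdn.example.com/table.png"/></div>'
print(collect_product_images(page, "https://shop.example.com/catalog"))
```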

They’re going to launch with the first store developed with a partner before the end of the year.

Future perspectives

Nima told me that in the future he wants to become “the king of content”. They don’t intend to build the new ARKit, nor the final AR/VR applications. Threedy AI wants to offer the possibility of having high-quality content, of managing it in the best way possible, and of being able to analyze it in its final context. And I really wish them good luck with this venture of theirs.

References

If you are interested in the services offered by Threedy AI or you want to invest in this startup, go to its official website and contact them, or ask me for an introduction!
