OpenBCI: games using brain-computer interfaces are coming in 3 years

You know that I have a big passion for brain-computer interfaces, a passion that grew even more when I understood that they are a perfect match for AR and VR (I even dedicated a long article to this in the past). That’s why I feel very honored to host OpenBCI on my blog: a famous company that builds neural interfaces that are not only high quality, but also open source.

I had the pleasure of sitting down with Conor Russomanno, the CEO, to talk about the new product they’re building, dubbed Galea, which is being marketed not only as a disruptive innovation for BCIs but also as a product specifically designed to work with XR technologies. In recent days, Gabe Newell of Valve has talked a lot about how he believes in BCIs and about his partnership with OpenBCI to shape the XR of the future. I think GabeN has overhyped the technology a bit, but Conor confirmed to me that BCIs are coming sooner than most people think, and that a first version could be in consumers’ hands within 3 years (this is more or less consistent with what Andrew Bosworth said about integrating BCIs into Facebook’s headset). He also confirmed the collaboration with Valve, and the fact that the first version of Galea will be fully integrated with the Valve Index.

Isn’t this amazing? Yes, it is. Read the full interview below to discover all the details!

Hello Conor, can you introduce yourself and OpenBCI?

My name is Conor Russomanno, and I’m the CEO of OpenBCI. OpenBCI has been making open-source tools for neuroscience and biosensing since 2014.

Brain-computer interfacing (BCI) is making science fiction a reality. Our team has been researching and developing open-source hardware and software to help our larger community of independent researchers, academics, DIY engineers, and businesses at every scale create products that bring us closer to solving society’s greatest challenges, from mental health to the future of work.

What is Galea, the latest product you have announced?

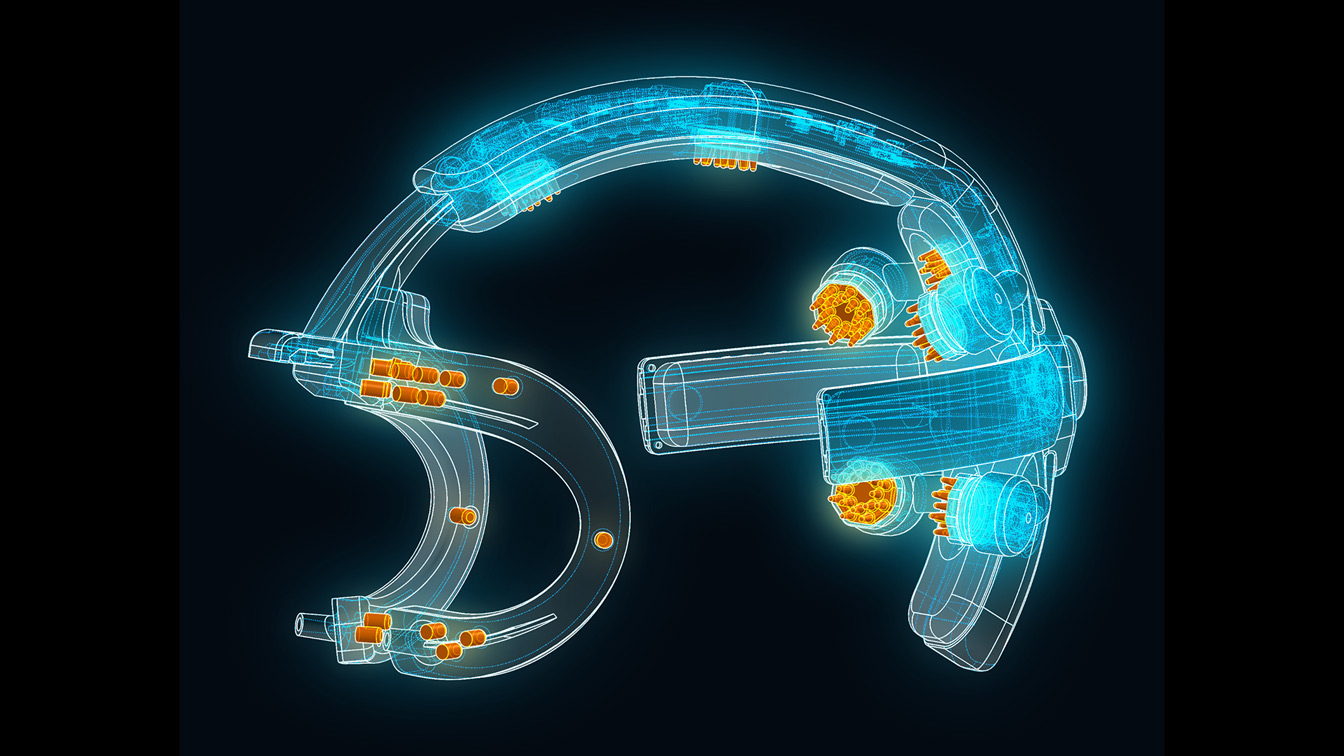

Galea is a hardware and software platform for bringing advanced biometrics to HMDs. The Galea hardware is designed to be integrated into existing HMDs and is the first device that simultaneously collects data from the wearer’s brain, eyes, heart, skin, and muscles. Our current version includes sensors for EEG, EOG, EMG, PPG, and EDA, with the option to include image-based eye tracking as well. On the software side, we’ll enable users to bring tightly time-locked data into development engines like Unity, and, by building on open-source libraries like BrainFlow, we’ll make the raw data available in most common programming languages as well.
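To give an idea of what "time-locked" data means in practice, here is a minimal sketch (not Galea’s or BrainFlow’s actual method) of one common approach: sensors sample at different rates, so each sample of a reference stream is paired with the nearest-in-time sample from every other stream. The stream names and sample rates below are hypothetical.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t (timestamps sorted ascending)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    # Pick whichever neighbour is closer in time; ties go to the earlier sample.
    return values[i] if after - t < t - before else values[i - 1]


def align_streams(reference_ts, streams):
    """Pair every reference timestamp with the nearest sample of each other stream.

    streams maps a stream name to a (timestamps, values) pair.
    Returns one dict per reference timestamp.
    """
    rows = []
    for t in reference_ts:
        row = {"t": t}
        for name, (ts, vals) in streams.items():
            row[name] = nearest_sample(ts, vals, t)
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Hypothetical rates: EEG at 250 Hz (4 ms steps), PPG at 100 Hz (10 ms steps).
    eeg_ts = [0.000, 0.004, 0.008]
    ppg = ([0.000, 0.010], [512, 530])
    for row in align_streams(eeg_ts, {"ppg": ppg}):
        print(row)
```

Nearest-sample matching is the simplest option; real pipelines may interpolate instead, and they depend on all sensors sharing one clock, which is exactly what integrating everything in a single device helps with.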

Galea is the culmination of OpenBCI’s six years (seven in February!) of research and development in the BCI space, and was created with the goal of providing fully immersive and personalized experiences through various streams of biometric feedback. This product makes it possible to better comprehend how an individual reacts to digital worlds and experiences in real time.

Why have you decided to develop it?

OpenBCI created Galea based on our observations about trends in the neurotech/XR space and feedback from our customers and partners. I briefly spent some time working at Meta to try to integrate OpenBCI-based biosensing into their headsets, and while in San Francisco, I got to know Guillermo Bernal a bit better, who was investigating some of the same things as part of his Ph.D. work at MIT Media Lab. We both believe that the future of computing will be more closely tied to the physiology of individual users and make use of more than just our eyes and our thumbs.

OpenBCI has also watched our customers integrate our existing products into AR and VR headsets for years. In several of the surveys we’ve run, integration with HMDs has been a top request.

We saw an opportunity to continue our mission of democratizing access to the brain (and body) by creating a new kind of device for researchers and developers looking to explore the intersection of mixed reality, biometrics, and brain-computer interfacing.

Why did you need 6 years of research to design it?

As it turns out, making science fiction a reality takes time. Galea is a compilation of what we’ve researched and developed since the start of OpenBCI, and the culmination of our larger goal that we’ve been striving toward during this time—ethical, open-source access to BCI development. Measuring so many aspects of the brain and body in VR and MR for the first time, and getting it all right, is a years-long process.

I’ve read that Galea supports many sensors: will they all be available together, or are they the ones supported by the platform, with each user deciding which ones to use?

The initial version of Galea will have all sensors (EEG, EOG, EDA, EMG, and PPG) fully integrated into the Valve Index. There will also be an option to include image-based eye tracking, in addition to the EOG-based eye tracking.

Our goal with this first version of Galea is to give researchers, developers, and creators as many options as possible for understanding and augmenting the human mind and body. Future versions may include an a-la-carte option, and we may be able to do further customization for specific customers.

In what ways do you think Galea is better than the competition (e.g. Neurable, NextMind)?

Neurable and NextMind, while both established neurotechnology providers, are designed to collect only EEG data. In contrast, Galea will collect EOG, EMG, PPG, and EDA data, in addition to high-quality active EEG. We’ve also placed a huge emphasis on user comfort. We’ve put a lot of effort into alleviating pressure throughout the system with pads, springs, and other tricks we’ve learned over the years. We have been working closely with electrode manufacturers to test new shapes and new conductive materials to increase Galea’s comfort without compromising signal quality. I think anyone with experience working with these types of sensors will be impressed with what the team has been able to pull off.

What data will Galea be able to gather?

The raw data (EEG, EMG, EOG, EDA, PPG) collected by Galea from the user’s brain and body can be used to quantify all kinds of internal states. Attention, stress, and cognitive load are some of the better-established examples of subjective states that can now be quantified with physiological data. Emotion classification is another application that more and more researchers and companies are pursuing with this type of data.

Based on my observations, the best algorithms for accurately classifying emotions incorporate multiple types of data, not just EEG or any other single input. Galea’s APIs will make it easy to bring the raw data into a variety of programming languages (like Python, C++, Java, C#, Julia) and we’ll also be including software tools for researchers and XR developers to create immersive experiences tailored to the physical reactions of an individual user.
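Conor’s point about combining several data types can be illustrated with a deliberately tiny sketch of feature-level fusion: per-window features from different sensors are concatenated into one vector, and a simple nearest-centroid classifier assigns a label. The feature choice (EEG alpha power, heart rate from PPG, skin conductance from EDA), the labels, and the numbers are all hypothetical, and this is not OpenBCI’s algorithm.

```python
from math import dist
from statistics import mean


def fuse_features(eeg_alpha_power, heart_rate_bpm, eda_microsiemens):
    """Concatenate per-window features from three modalities into one vector."""
    return (eeg_alpha_power, heart_rate_bpm, eda_microsiemens)


def train_centroids(labelled):
    """labelled: list of (feature_vector, label) pairs. Returns label -> mean vector."""
    by_label = {}
    for vec, label in labelled:
        by_label.setdefault(label, []).append(vec)
    # Average each feature column separately for every label.
    return {label: tuple(mean(col) for col in zip(*vecs)) for label, vecs in by_label.items()}


def classify(centroids, vec):
    """Return the label of the closest centroid (Euclidean distance).

    Note: features are not normalised here, so large-valued ones (heart rate)
    dominate the distance; a real pipeline would standardise them first.
    """
    return min(centroids, key=lambda label: dist(centroids[label], vec))


if __name__ == "__main__":
    training = [
        ((10.0, 60.0, 2.0), "calm"),
        ((12.0, 62.0, 2.2), "calm"),
        ((4.0, 95.0, 8.0), "stressed"),
        ((5.0, 100.0, 9.0), "stressed"),
    ]
    centroids = train_centroids(training)
    print(classify(centroids, fuse_features(6.0, 90.0, 7.0)))
```

Published emotion classifiers use far richer features and models; the sketch only shows the idea of putting several physiological signals into a single decision instead of relying on EEG alone.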

What are its possible applications? Can you give us some examples?

There are obvious applications in the world of entertainment. I’m personally excited about the idea of creating choose-your-own-adventure style content that detects and reacts to an individual’s level of attention or emotions. We’ve also talked a lot around the office about zombie or horror games that react to your heart rate, building on the kind of thing companies like Flying Mollusk demonstrated.
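As a rough illustration of a horror game that reacts to your heart rate, the sketch below estimates beats per minute from the timestamps of PPG peaks (60 divided by the mean interval between beats) and maps it to a "scare intensity" between 0 and 1. The resting and maximum heart rates, and the policy of pushing harder when the player is calm, are hypothetical design choices, not how Flying Mollusk or OpenBCI do it.

```python
def bpm_from_peaks(peak_times_s):
    """Estimate heart rate (beats per minute) from PPG peak timestamps in seconds."""
    if len(peak_times_s) < 2:
        raise ValueError("need at least two peaks to measure an interval")
    intervals = [b - a for a, b in zip(peak_times_s, peak_times_s[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


def scare_intensity(bpm, resting_bpm=65.0, max_bpm=130.0):
    """Map heart rate to a 0..1 intensity: the calmer the player, the more the game pushes.

    resting_bpm and max_bpm are hypothetical defaults; a real game would calibrate per player.
    """
    arousal = (bpm - resting_bpm) / (max_bpm - resting_bpm)
    arousal = min(max(arousal, 0.0), 1.0)  # clamp to the 0..1 range
    return 1.0 - arousal


if __name__ == "__main__":
    peaks = [0.0, 0.8, 1.6, 2.4]  # hypothetical peak times from a PPG sensor
    hr = bpm_from_peaks(peaks)
    print(f"heart rate {hr:.0f} bpm, scare intensity {scare_intensity(hr):.2f}")
```

The inverse mapping is just one option: a game could equally ease off when the player’s heart rate spikes, which is closer to a relaxation or biofeedback-training design.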

There are also many applications in healthcare. Understanding a patient’s particular bodily response to a given experience could empower a physical therapist to adjust rehabilitation treatment. Similarly, motor-impaired individuals may be able to gain more control over prosthetics and other pieces of assistive technology.

VR has been an increasingly popular tool among neuroscientists as well. You can control experimental variables more easily in VR and set up scenarios that wouldn’t be possible in a lab or even the real world.

The possibilities are vast, with use cases in entertainment, medicine, work, education, and more.

On what hardware will it work? Will it also be compatible with standalone headsets like Quest and HoloLens?

The first version of Galea will be fully integrated into the Valve Index. Our plan is to integrate with additional headsets, both VR and AR. I’m a big fan of the HoloLens 2 and admire what that team has been able to create. We’re actively discussing partnerships with other device manufacturers.

Will it be compatible with Unity and Unreal Engine for development?

Yes, it will be compatible with both Unity and Unreal via the BrainFlow SDK. Additional example demos and scenes showing how to use the core features of Galea will also be provided to beta partners.

Will it be open source? Will people be able to customize it according to their needs?

Yes, following the beta program, we plan to continue what we’ve done in the past and open-source the electronic designs so people can customize and iterate on the device.

I’m a huge believer in open source and think it is one of the best ways to ensure these technologies are developed ethically and their gains are distributed evenly.

What are the expected price and release date?

I can’t answer those questions yet, but I expect we will have more info for people next month. I encourage people to sign up for the mailing list at galea.co to be notified once that info is ready.

When do you think BCIs will reach the level of sci-fi works like Sword Art Online?

I’ve always been more of a Ready Player One guy (the book more so than the movie). It’s going to happen sooner than people realize, and it’s been crazy to see the explosion of consumer-oriented neurotech companies over the past 6 years. I think we’re going to see regular people experiencing games or other entertainment content using BCI within the next 3 years. I’m not sure exactly when the full-fidelity metaverse will emerge, but I hope that when it does, people can look back and say Galea was a part of its evolution.

What do you say to people who are afraid of how companies may use the personal data gathered through BCIs?

The potential for misuse is always going to be present with a technological advance like this, alongside the potential positive applications for science, rehabilitation, and communication. I think the most important thing users can do is to know the value of their personal data and to vote with their wallets. Don’t support companies with a history of privacy infringement. Be wary of any service that isn’t transparent about how your data is used, or that doesn’t give you meaningful control over permissions for your personal data. I think that latter point is something that could happen at the device level, or as part of a new operating system for BCIs.

If you had to give a piece of advice to someone who wants to do your job, what would you say to them?

Get hands-on with the tech now. Try recreating a BCI project or following one of the tutorials in the OpenBCI docs. Document your experience, where you got stuck, where you learned things. Share that with the world. Whenever someone applies to OpenBCI, it’s a big bonus if they have articles, repos, or examples of work they did with similar technology in the past.

Anything else to add to this interview?

A comprehensive understanding of how humans think and feel is only a few years away from being fully accessible to consumers—in fact, the use of biometric data in our smartphones and other connected devices is a testament to that. What we’re doing at OpenBCI is not only encouraging the momentum of BCI innovation happening now, but ensuring that this newfound knowledge of an individual is used for good and for the greater goal of solving societal challenges.

I really want to thank Conor for the great insights he has given me about brain-computer interfaces! I hope they have a spare Galea to give me soon ahah! If you also need Galea for your work, don’t forget to register your interest at Galea.co.

It’s amazing to think that in 3 years we will have the first implementations of brain control in consumer devices. It is a dream come true! Are you excited as well? Let me know in the comments!

(Header image by OpenBCI)

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.