NVIDIA’s Dave Weinstein: AI integration will be fundamental for the future of XR

I had the great pleasure of talking with Dave Weinstein, Director of XR at NVIDIA, about the amazing job that NVIDIA is doing in the XR field. NVIDIA is investing a lot of resources in immersive realities, and its commitment to XR is visible in products like Omniverse and the CloudXR SDK. So I wanted to check with Dave what NVIDIA is working on at present, and also what its future perspectives are. As I expected, his answers did not disappoint me, and I found his focus on the convergence of XR and AI especially interesting.

Hello Dave, can you introduce yourself to my readers?

It’s great to meet you and your readers, Tony. I’m the Director of Virtual and Augmented Reality at NVIDIA. My team product-manages all of NVIDIA’s XR products, and we manage the relationships with NVIDIA’s XR partners.

What XR projects are you working on lately?

NVIDIA’s goal is to improve the reach and quality of XR across the consumer and professional ecosystems. You’re probably familiar with our CloudXR product – it’s our SDK for streaming XR experiences. CloudXR is exciting because it puts all of your XR content at your fingertips, no matter where you are, and it makes it possible to experience your most complex models on even modest XR devices. As long as you have access to a high-performance GPU back at your office, in your data center, or through a cloud service provider (CSP), you can stream your rich XR experiences anywhere.

Most recently, we’ve taken XR to the cloud. This took a lot of people by surprise, because, as we all know, VR is very sensitive to latency. But it turns out that by being highly responsive to networking conditions and by efficiently eliminating perceived latency, we’re able to deliver robust, high-quality XR streaming.
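Dave doesn’t detail how CloudXR hides latency, so the following is only a minimal sketch of two general ideas that his description suggests, not NVIDIA’s implementation: tracking network conditions with a smoothed round-trip estimate, and rendering for where the head *will* be when the frame arrives rather than where it was. The names `HeadSample`, `LatencyEstimator`, and `predict_yaw` are hypothetical, and the model assumes a single rotation axis and constant angular velocity.

```python
from dataclasses import dataclass


@dataclass
class HeadSample:
    t: float    # timestamp in seconds
    yaw: float  # head yaw in degrees (one axis only, to keep the sketch short)


class LatencyEstimator:
    """Exponentially weighted moving average of measured round-trip times."""

    def __init__(self, alpha: float = 0.2):
        self.alpha = alpha    # weight given to the newest measurement
        self.estimate = None  # seconds; None until the first measurement

    def update(self, rtt_s: float) -> float:
        if self.estimate is None:
            self.estimate = rtt_s
        else:
            # Blend the new sample in so the estimate follows changing network conditions.
            self.estimate = self.alpha * rtt_s + (1 - self.alpha) * self.estimate
        return self.estimate


def predict_yaw(prev: HeadSample, curr: HeadSample, latency_s: float) -> float:
    """Extrapolate yaw forward by latency_s, assuming constant angular velocity."""
    dt = curr.t - prev.t
    if dt <= 0:
        return curr.yaw  # no usable velocity estimate; fall back to the latest pose
    velocity = (curr.yaw - prev.yaw) / dt  # degrees per second
    return curr.yaw + velocity * latency_s
```

For example, with a 50 ms latency estimate and a head turning at 100 degrees per second, the server would render for a yaw about 5 degrees ahead of the last reported pose. A production system would predict full 6-DoF poses and also correct residual error on the headset, for instance with late reprojection.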

There are many reasons that XR streaming is exciting, and I’d like to highlight three of them. Number one, it lowers the barrier to entry. Put another way, it further democratizes XR. Everyone can be part of an immersive group design discussion when the price of entry is just an AWS account and a mobile XR device. Second, it raises the quality bar for realism – we want our immersive experiences to be as realistic as possible, and streaming from the cloud means we have access to all the computational power we need, whenever we need it. And third, it opens up new deployment and distribution models for our partners, such as XR-as-a-service for ISVs and portal-based streaming for secure content.

Let’s talk about Omniverse… could you please explain to us what it is? I think there is a lot of confusion in the community about it…

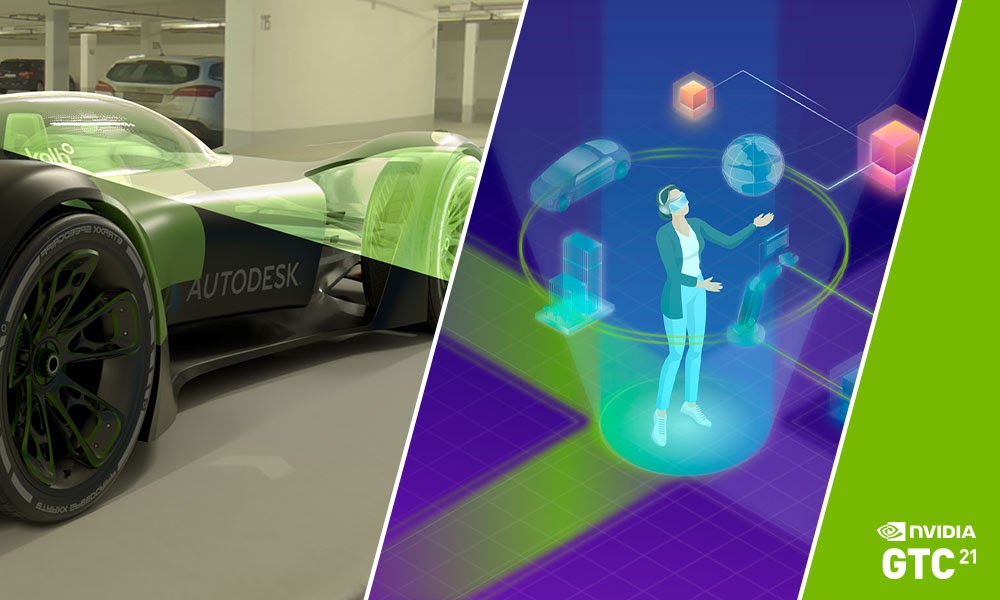

Omniverse is a simulator of 3D virtual worlds. To use an analogy, Omniverse Nucleus – the platform’s collaboration engine – is like the ‘Git’ of 3D assets and these virtual worlds. Omniverse provides ground truth simulation for use cases spanning across industries and scales of projects – whether you want to visualize and simulate an architectural model in real-time, or collaboratively iterate on a single car design – or simulate a perfect digital twin of a mass-scale factory.

We built Omniverse for ourselves, to help accelerate our design and development processes by connecting our teams and their preferred software tools; to build physically accurate virtual worlds and environments to train our autonomous vehicle and robot systems; and to provide a unified platform to deliver our core AI, graphics, and simulation technologies to our engineering, design, and creative teams.

We see Omniverse as part of the beginnings of the metaverse. Because it’s based on Pixar’s Universal Scene Description (USD), a powerful, flexible open-source framework, Omniverse will be the connector of worlds, whether different ‘worlds’ of leading industry software such as Epic Games’ Unreal Engine, Autodesk Maya or 3ds Max, or Substance by Adobe; or one day, large-scale virtual worlds like we see in popular video games today.

Why is a solution like Omniverse important? Who is using it? What are its advantages?

Today, 3D workflows are extremely complex. They require large, widespread teams with special sets of skills that require specialized software. The scale and complexity of systems, products, models, and projects that need to be modeled and simulated in 3D are growing exponentially. Omniverse is the solution to these challenges – enabling interoperability between disparate software applications, allowing skilled designers and engineers to continue working in their tools of choice, and providing scalable, GPU-accelerated physically accurate simulation for these complex data sets.

Omniverse fundamentally transforms 3D production pipelines. By enabling true real-time collaboration between users, teams, their tools, and asset libraries, it significantly speeds time to production, shortens review cycles, and lowers the vast costs associated with file incompatibility, duplicated storage, and time wasted on tedious import/export processes.

Today, we have over 50,000 individuals using Omniverse in open beta across industries and disciplines. We also have over 400 companies actively evaluating Omniverse, including BMW Group, which is using the platform to design, deploy, and optimize a digital twin of its factories of the future; Volvo Cars, which is using Omniverse to augment the customer buying experience of its cars; and Foster + Partners and KPF, which are connecting their global teams to collaboratively design innovative buildings.

NVIDIA offers many software services/SDKs that many developers don’t know about. What are the most important services that you offer that may be useful to XR developers out there?

Artificial Intelligence. Most of today’s XR experiences are built around decimated models with simplified materials and unnatural modes of interaction. Those were design decisions that developers had to make based on the tools and platforms they had access to at the time. But as those developers begin leveraging AI and streaming, it will be a total game-changer. NVIDIA has been building an extensive set of AI SDKs, including AI-based upsampling (DLSS), AI-based conversational interaction (Jarvis), and AI-based facial feature tracking (Maxine). As developers start integrating these features into their VR experiences, it will take immersion and interactivity to a whole new level.

What are the XR use cases your company has worked on that have amazed you the most?

As a company, we focus on creating enabling technologies that drive innovation across a multitude of industries. Our XR core technology stack started with the NVIDIA VRWorks SDK, a set of tools to accelerate and enhance all VR applications. We created fundamental technologies like Direct Mode, Context Priority, and Variable Rate Shading, which provided fine-grained control for VR rendering. But the technology innovation that’s amazed me the most has to be CloudXR, which, as I mentioned above, is our XR streaming SDK. The inspiration for CloudXR was straightforward: to stream XR experiences the same way we stream virtual desktops and games (GeForce NOW). But everyone thought network latency would be a deal-breaker for immersion. Despite the uphill battle, our engineering team persevered and made it work; it was an incredible tour de force. And the fact that it works as robustly as it does, even over distances of hundreds of miles, is simply amazing.

What could we expect in the XR field from NVIDIA in the upcoming 6-12 months?

Over the next 6-12 months, we’ll start to see the beginnings of the AI+XR integrations that I mentioned above. With the addition of AI for natural interfaces and digital assistants, and sensors and tools for engaging collaboration, we’ll see a major leap in the richness and ease of use that we’ve all been wanting in XR. We’ll be creating the foundational technologies for the metaverse, and we’ll start to see early examples of how incredible the XR future will be.

What is NVIDIA’s vision for AR and VR for the long term (5-10 years)?

AR and VR will be incredibly rich — in terms of content quality, but also in terms of sensor integration, and collaborative interaction. For that to work, the future of XR has to be split-mode: with highly latency-sensitive aspects of the experience running locally and computationally demanding aspects running in the cloud. We demonstrated a version of this split-mode approach with NVIDIA RTXGI, computing and streaming global illumination (GI) from a remote server to a lightweight local client that was rendering direct illumination and integrating the streamed GI. Similar approaches will emerge for physics and complex AI. An additional benefit of split-mode graphics is that it can amortize expensive computation during collaboration — world physics and indirect illumination can be computed once, centrally, and the results can be streamed to all participants.
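The amortization argument can be made concrete with a little arithmetic. The sketch below is a simplified illustration, not the RTXGI pipeline (which works with probe-based irradiance): the cost units are hypothetical, and the shading model assumes a plain diffuse surface whose color scales the sum of locally computed direct light and streamed indirect light, both already in the same units. The function names `frame_cost` and `shade` are illustrative only.

```python
from typing import List


def frame_cost(participants: int, gi_cost: float, direct_cost: float, split_mode: bool) -> float:
    """Total GPU work per frame across all participants, in arbitrary cost units."""
    if split_mode:
        # Indirect lighting is computed once on the server and streamed to everyone;
        # each client only renders its own direct lighting.
        return gi_cost + participants * direct_cost
    # Without split mode, every client computes both terms on its own.
    return participants * (gi_cost + direct_cost)


def shade(albedo: List[float], direct: List[float], streamed_indirect: List[float]) -> List[float]:
    """Combine local direct light with streamed indirect light per RGB channel (diffuse only)."""
    return [a * (d + i) for a, d, i in zip(albedo, direct, streamed_indirect)]
```

With four participants, a GI cost of 10, and a direct-lighting cost of 2, split mode totals 10 + 4 × 2 = 18 units versus 4 × (10 + 2) = 48 for fully local rendering, and the gap widens as more people join the session.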

You already offer many features like Variable Rate Shading, but almost no headset seems to implement foveated rendering. When do you think it is going to become more mainstream?

Foveated rendering is one of my favorite aspects of VR. It has tremendous potential to accelerate rendering, but optimizing the graphics and transport pipeline to maximize that benefit will require many coordinated improvements. The greatest win for foveated rendering will be in streaming scenarios, where the local headset transfers the gaze data to the remote server, the remote server efficiently renders the scene based on that gaze information (e.g. using ray-tracing and matching the ray sampling density to the user’s visual acuity across the visual field), the rendered frame is efficiently compressed and transported based on the gaze data, and finally, the image is decoded and displayed back on the headset. One piece of this scenario that hasn’t been discussed much is how the context of the experience can improve the accuracy of predicting gaze direction — when the bad guy jumps out from behind a tree, or when a professor starts talking to a class, we know where the user is going to look. Each of these pieces is complex, so it’ll take some time, but as they come together we’ll see more and more efficiency gains, which in turn will allow for richer and richer experiences.
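To illustrate what "matching the ray sampling density to the user’s visual acuity" could look like, here is a minimal sketch under stated assumptions. It uses a simplified linear acuity model of the kind common in foveated-rendering research, in which the minimum angle of resolution (MAR) grows linearly with the angle from the gaze direction; the constants `mar0_deg` and `slope` are illustrative guesses, not values from NVIDIA, and all function names are hypothetical.

```python
import math
from typing import Sequence


def eccentricity_deg(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Angle in degrees between the gaze vector and a pixel's view direction (both unit length)."""
    dot = sum(g * d for g, d in zip(gaze, direction))
    dot = max(-1.0, min(1.0, dot))  # clamp rounding error into acos's domain
    return math.degrees(math.acos(dot))


def relative_ray_density(ecc_deg: float, mar0_deg: float = 1 / 60, slope: float = 0.022) -> float:
    """Fraction of the foveal ray density to spend at a given eccentricity.

    Assumes MAR(e) = mar0 + slope * e (illustrative constants). Rays form a 2D
    density over the image, so they scale with the inverse square of MAR.
    """
    mar = mar0_deg + slope * ecc_deg
    return (mar0_deg / mar) ** 2


def rays_for_pixel(max_rays: int, ecc_deg: float) -> int:
    """Ray budget for one pixel: the full budget at the fovea, at least one ray elsewhere."""
    return max(1, round(max_rays * relative_ray_density(ecc_deg)))
```

In a streaming setup like the one Dave describes, the headset would send `gaze` to the server each frame, and the server would call something like `rays_for_pixel` per pixel before ray tracing. The steep falloff is why the potential savings are large: with these constants, a pixel 40 degrees from the gaze point gets a single ray while the fovea gets the full budget.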

Some years ago there was a lot of hype around VirtualLink, the new standard designed to offer an easy connection between a PC and a VR headset, but the standard never took off. Can you explain to us why that happened? And is there any chance of it coming back?

VirtualLink is a great solution. By providing power, data, and video over a single cable, it dramatically simplifies the VR setup process. No more breakout boxes, no more extension cords. Unfortunately, the VR ecosystem was too fragmented to coalesce around this new standard. As we saw that fragmentation happening, we also saw 5G and All-In-One headsets coming over the horizon; so rather than continue to focus on VirtualLink we turned our attention to the challenge of how to remove the cables altogether — which resulted in our XR streaming SDK, CloudXR.

If you had to pass on one lesson from your experience to someone who would like to do your job, what would it be?

Henry Ford said if he’d asked people what they wanted, they would have said faster horses. The early days of XR were defined by taking the existing graphics pipeline, optimizing it to hit 90Hz stereo, and shrinking it to fit in a headset form-factor. But the future of XR is about personalized spatial computing – tearing it all apart, mixing in new ingredients of AI, ray-tracing, streaming, sensors, and collaboration – and building something entirely new. The future of XR isn’t an evolution of the graphics pipeline, it’s the revolution of personalized computing. The lesson I’d pass on is to skate to where the puck is going to be. That’s what NVIDIA is doing; we’re building the platform for the future of XR.

Anything else to add to this interview?

I just wanted to convey how excited I am about how quickly XR is maturing, and I wanted to encourage all of your readers to get involved. Download Omniverse. Play with CloudXR. Build an XR experience. We’re building the metaverse, and we want everyone to participate.

Many thanks to David for the time he dedicated to me (and to us), and I invite you to follow his advice: experiment with innovative XR stuff, and let’s build the metaverse together!

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.