Oculus Space Sense: how to activate it, and my hands-on impressions!

At Facebook Connect, Facebook/Meta announced that the Oculus Quest was getting a new runtime update, v34, bringing some very interesting features to the Quest 2. Among these features was Space Sense, an update of the Guardian system that should help you detect objects and people that come into your play area while you are in VR. I have just received this update and tested this feature to see how it works, and in this post you can find everything about it!

What is Space Sense

Space Sense is an upgrade of the Guardian System. While the Guardian just shows you in VR the limits of the play area that you have defined in the settings, Space Sense aims to warn you when you risk hurting yourself: it analyzes in real time what is happening in the real world around you and shows you the silhouettes of the elements that are close to you. That is, it doesn’t consider the play area boundaries that you have defined, but constantly analyzes your surroundings, looking for something you may stumble over while you are in VR.

If your doggo comes next to you while you are playing, your standard Guardian can’t detect it, while Space Sense can see it and it shows you a silhouette of it inside your VR experience, so that you can see that your dog is approaching you and you don’t risk hurting it. The same should happen with people entering your play space, and even with objects. According to Meta, Space Sense shows you everything that is around you up to 9 feet (around 2.75m) of distance.

I have tested Space Sense today, so let me tell you how to activate it and how it works.

How to activate Space Sense

At the time of writing, Space Sense is an experimental feature. To activate it, you just have to go to the Settings, select the Experimental Features tab, and then look for Space Sense. Clicking on its label opens the Space Sense settings page, where you just click on the slider to activate or deactivate it. Notice that the feature has its own page in the settings, so I guess that Meta plans to add more settings for it in the future (e.g. for the sensitivity of the detection).

If you can’t find that feature, it means that you don’t have runtime v34 installed. Update your Quest, if you can, and then activate it. If you can’t update your device to version 34 yet, it means that the update has not been rolled out to you, and you have to try again in the coming days.

It may also happen that the runtime activates it automatically for you. This is what happened to me: I turned on my Quest today, and I started seeing the edges of the objects around me because Space Sense had been automatically activated together with the new runtime update.

Hands-on Space Sense

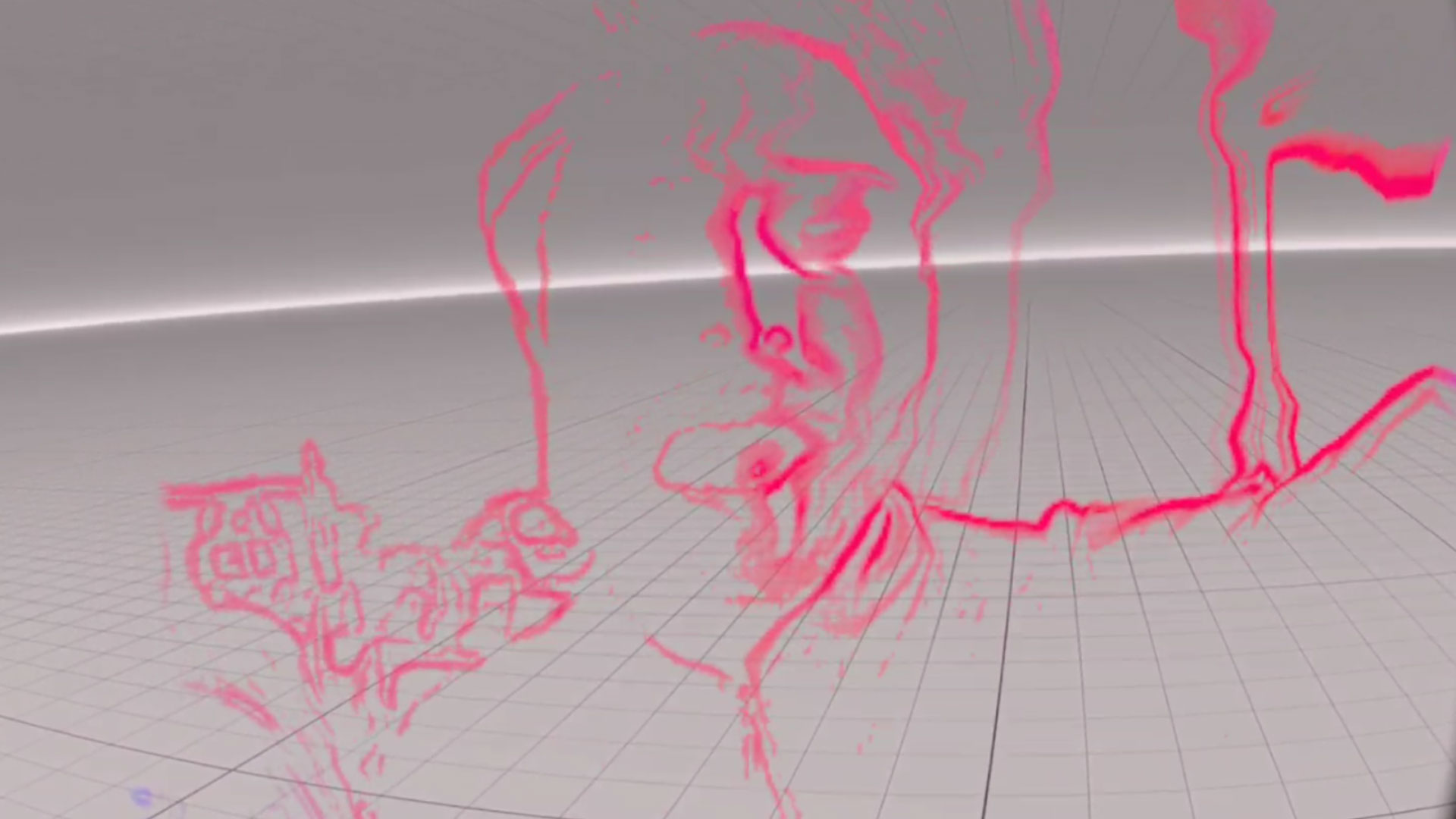

Space Sense shows inside your headset all the edges of the objects around you, up to 9 feet away. It shows you the edges of every kind of element: not only the people, not only the moving elements. Everything. When I read about Space Sense, I thought there was some kind of AI that detected that a new element had entered your play area and segmented its silhouette to show it to you, but this is not how it works. Space Sense is rather basic in this respect: it just shows you all the edges of the objects that are within a certain depth.
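To make the idea of "all the edges within a certain depth" more concrete, here is a minimal sketch of how such a filter could work on a tiny depth map. This is only my illustration and not Meta's implementation: the function name `edges_within_range`, the `jump` threshold, and the use of a plain grid of distances are all assumptions.

```python
MAX_RANGE_M = 2.75  # roughly 9 feet, the range quoted by Meta


def edges_within_range(depth, max_range=MAX_RANGE_M, jump=0.3):
    """Mark the pixels that lie on a depth discontinuity and are in range.

    depth is a list of rows of distances in meters (a hypothetical depth map).
    A pixel counts as an edge when its depth differs from its right or bottom
    neighbor by more than `jump` meters. Pixels beyond max_range are ignored.
    """
    rows, cols = len(depth), len(depth[0])
    edges = [[False] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            d = depth[y][x]
            if d > max_range:
                continue  # too far away: never drawn
            right = depth[y][x + 1] if x + 1 < cols else d
            below = depth[y + 1][x] if y + 1 < rows else d
            if abs(d - right) > jump or abs(d - below) > jump:
                edges[y][x] = True
    return edges


# A wall 5 m away with one object 1 m away in the middle of the view.
scene = [
    [5.0, 5.0, 5.0],
    [5.0, 1.0, 5.0],
    [5.0, 5.0, 5.0],
]
print(edges_within_range(scene))
```

Notice that nothing in this sketch knows what the object is: a chair, a dog, and your own arm all produce the same kind of edge, which matches what I see in the headset.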

Among the objects within this depth, there are also your hands and your arms. The system tries to cancel out the edges that fall within a certain radius of your controllers (so as to hide the edges of the hands), but this doesn’t always work, and the problem is even more noticeable when you are using your bare hands. So you can also see the edges of your own body in VR.
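The "cancel out the edges around the controllers" behavior can be sketched as a simple distance check. Again, this is just my guess at the general idea: the function name `hide_edges_near_controllers`, the 3D point representation, and the 15 cm radius are hypothetical values chosen for illustration.

```python
import math


def hide_edges_near_controllers(edge_points, controllers, radius=0.15):
    """Drop the edge points that are within `radius` meters of any controller.

    edge_points and controllers are lists of (x, y, z) positions in meters.
    The radius is an assumed value, not something documented by Meta.
    """
    return [
        p for p in edge_points
        if all(math.dist(p, c) > radius for c in controllers)
    ]


edge_points = [(0.0, 1.0, 0.3), (0.05, 1.0, 0.3), (1.0, 1.0, 2.0)]
controllers = [(0.0, 1.0, 0.3)]
print(hide_edges_near_controllers(edge_points, controllers))
```

A fixed radius like this hides the hand that holds the controller, but not the forearm or the elbow, which sit farther away from it. That would be consistent with the leftover arm edges I see, although I can't confirm this is the actual cause.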

The lines are colored, and the color indicates how dangerous that edge is for you: azure means that the edge is distant, so you have no risk of bumping into that object, while red means that the object is very close. In between, you have a full range of colors, with edges going from azure to violet to pink to red.
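A distance-to-color mapping like this one can be sketched as a piecewise linear gradient. The RGB values of the stops and the choice of mapping the whole 2.75 m range linearly are my assumptions: I don't know the exact colors or the thresholds that the runtime uses.

```python
MAX_RANGE_M = 2.75  # roughly 9 feet

# Hypothetical RGB color stops, ordered from nearest to farthest.
STOPS = [
    (255, 0, 0),      # red: very close
    (255, 105, 180),  # pink
    (138, 43, 226),   # violet
    (0, 127, 255),    # azure: far away
]


def edge_color(distance_m, max_range=MAX_RANGE_M):
    """Interpolate an RGB color for an edge at the given distance in meters."""
    # Normalize the distance to the 0..1 interval, clamping out-of-range values.
    t = min(max(distance_m / max_range, 0.0), 1.0)
    scaled = t * (len(STOPS) - 1)
    i = min(int(scaled), len(STOPS) - 2)  # index of the lower color stop
    f = scaled - i                        # position between the two stops
    a, b = STOPS[i], STOPS[i + 1]
    return tuple(round(a[k] + (b[k] - a[k]) * f) for k in range(3))


for d in (0.0, 1.0, 2.0, 2.75):
    print(d, edge_color(d))
```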

Space Sense also works with Oculus Link, so you can see the silhouettes of objects and people around you even when you are playing PCVR games. The colored silhouettes are not captured by the recording feature of the Quest or by Oculus Mirror on PC, but they are captured by scrcpy, and so also when recording via SideQuest.

I used Space Sense for my review, and then I disabled it on my Quest, because in this first version I find it terribly distracting. At home I don’t have a true VR room: I just have a bit of free space where I play VR, with pieces of furniture around me (luckily, my situation in the office is better), and this is a situation that I share with many other European friends. This means that in whatever direction I look, there is always something within 9 feet, so I always see the lines drawn by Space Sense in my VR view. This is really annoying because it breaks the magic, it breaks the sense of presence. Wherever I look, I see the colored lines of my surroundings on top of the VR game, and this constantly reminds me that I am at home. Plus, it ruins the graphical experience of the game, and it is distracting in games where you have to be really focused on the visuals.

Still, it is amazing to notice when someone enters your field of view. I asked a collaborator of mine to come close to me, and it was cool to see her silhouette appear when she got close and then disappear when she went away. This works like a charm, and it is great for offering more safety to people playing VR at home. But there is still a lot of work to do on this functionality, too: the depth of the shown objects and people feels wrong, and the elements look a bit “flat”, as if they were all made of billboards (I guess this is an effect of the depth understanding algorithm that tries to infer the depth of the objects in the scene). Also, their perceived depth is different from the real one… it is hard to tell exactly how far away a person is just by looking at their silhouette shown by Space Sense.

Final impressions

Meta defines Space Sense as an Experimental Feature, and I totally agree with this definition. The current implementation is a good start, but I don’t think it’s ready to be deployed to everyone. I understand the technical hurdles behind segmenting a scene by depth, and Meta engineers have already done a lot of work, but it’s not enough. Seeing every edge of every object around you, including your own body, is terribly distracting and immersion-breaking. I find it very annoying, and in fact I have disabled this feature. I think that at this moment it can only work if you have a proper “VR room”, that is, a large, completely empty space dedicated only to playing in VR: in this case, since there are no objects in your play area, you see no edges (apart from your arms, maybe), and so Space Sense only shows you the people and animals that enter your space. But in all other cases, it may be a problem.

I think Meta has to work a lot on making Space Sense smarter, for instance by only detecting the objects that are moving (a feature-matching algorithm may help here, showing only the edges close to a region where a movement of the features has been detected), or by only showing you the silhouettes of people and animals (in this case, an AI/ML system would be required to detect the edges belonging to people and animals). It also has to improve the perceived depth of the elements it shows, which at the moment doesn’t feel correct.
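To give an idea of what "only showing what moves" could mean, here is a very rough sketch. Note that it uses plain frame differencing on two depth maps, which is a much simpler technique than the feature matching I mentioned above, and that it assumes the headset stays still between the two frames (a real system would have to compensate for head movement). All the names and the 0.2 m threshold are hypothetical.

```python
def moving_pixels(prev_depth, curr_depth, threshold=0.2):
    """Mark the pixels whose depth changed by more than `threshold` meters."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_depth, curr_depth)
    ]


def keep_moving_edges(edges, moving):
    """Keep only the edge pixels that also belong to a moving region."""
    return [
        [e and m for e, m in zip(edge_row, moving_row)]
        for edge_row, moving_row in zip(edges, moving)
    ]


# Two depth frames: the object in the right column moves from 2.5 m to 1.5 m.
previous = [[2.0, 2.5], [2.0, 2.5]]
current = [[2.0, 1.5], [2.0, 1.5]]
# Assume an edge detector marked every pixel of the current frame as an edge.
edges = [[True, True], [True, True]]

print(keep_moving_edges(edges, moving_pixels(previous, current)))
```

With a filter like this, the static furniture in the left column would disappear from the view, and only the approaching object would remain outlined.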

Anyway, it’s the first version, so I don’t want to be harsh: as I’ve said, I know how difficult this can be, especially on a constrained system like the Quest 2. Let’s say it is a good start. I’m sure that Meta is going to improve it, and I can’t wait to try its future versions.

(PS: While we wait for these future versions, would you mind sharing this article with your VR peers to inform them about Space Sense, too? Thank you!)
