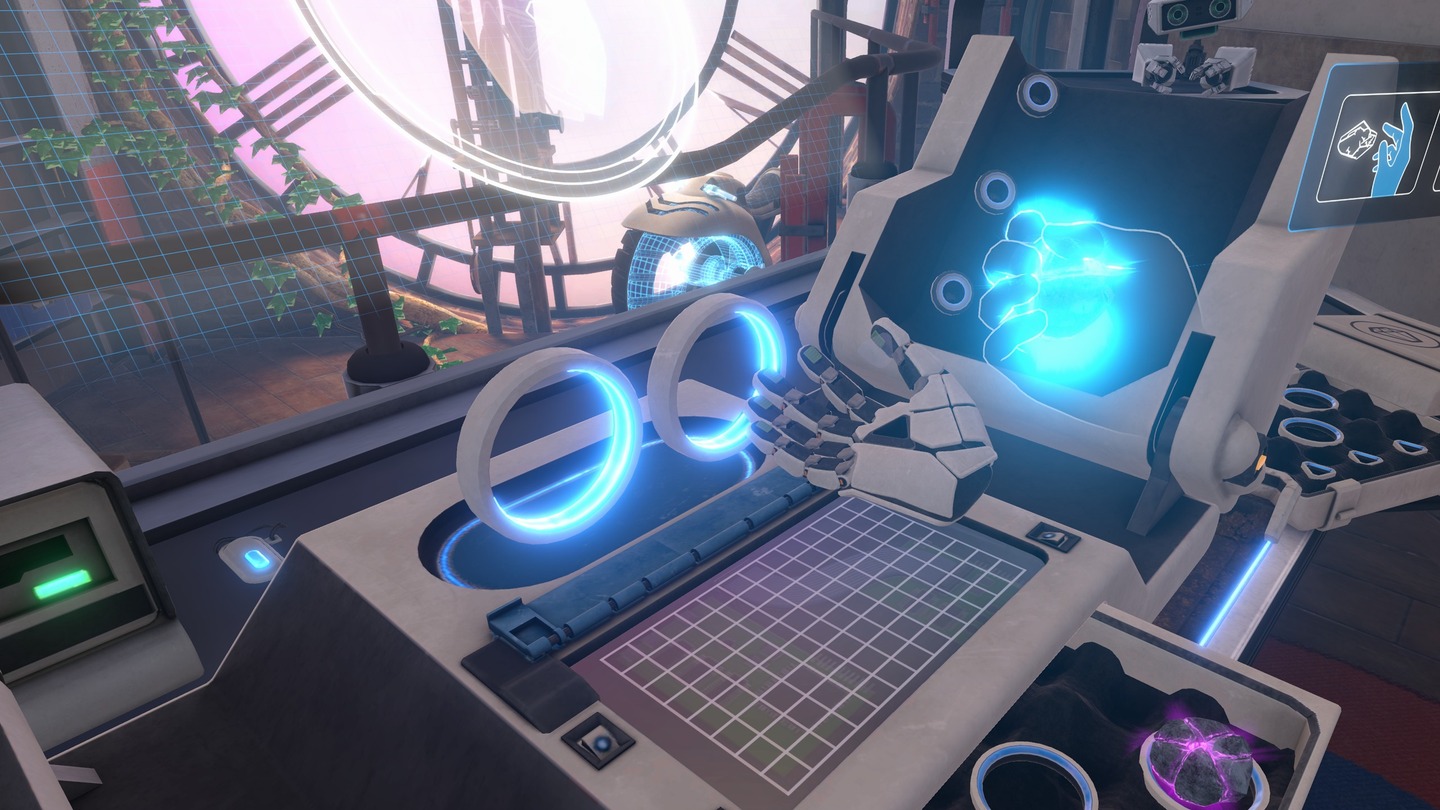

First Hand analysis: a good open source demo for hand-based interactions

I finally managed (with some delay) to find the time to try First Hand, Meta’s open-source demo of the Interaction SDK, which shows how to properly develop hand-tracked applications. I’ve tested it for you, and I want to share my opinions about it, both as a user and as a developer.

First Hand

First Hand is a small application that Meta has developed and released on App Lab. It is not a commercial app, but an experience developed as a showcase of the Interaction SDK inside the Presence Platform of Meta Quest 2.

It is called “First Hand” because it was loosely inspired by Oculus First Contact, the showcase demo for the Oculus Touch controllers released for the Rift CV1. Actually, the inspiration is very vague: the two experiences are totally different, and the only similarity is the presence of a cute robot.

The experience is open source, so developers wanting to implement hand-based interactions in their applications can literally copy-paste the source code from this sample.

Here is my opinion of this application.

First Hand – Review as a user

The application

As a user, I found First Hand a cute and relaxing experience. There is no plot, no challenge, nothing to worry about. I just had to use my bare hands to interact with objects in a natural and satisfying way. The only problem for me is that it was really short: I finished it in about 10 minutes.

I expected a clone of the Oculus First Contact experience that made me discover the wonders of Oculus Touch controllers, but it was actually a totally different experience. The only similarities are the presence of a cute little robot and some targets to shoot. This makes sense, considering that controllers and bare hands shine with different kinds of interactions, so the applications must be different. Anyway, the purpose and the positive mood are similar, and this is enough to justify the similar name.

As I’ve said, there is no real plot in First Hand. You find yourself in a clock tower, and a package gets delivered to you. Inside it there are some gloves (a mix between Alyx’s and Thanos’s ones), which you can assemble and then use to play a minigame with a robot. That’s it.

The interactions

What is important here is not the plot, but the interactions you perform naturally with just your bare hands. And some of them are really cool, for instance:

- Squeezing a rock in your hand until it breaks

- Scrolling a floating display by just swiping your finger on it, something that made me feel a bit like Tom Cruise in Minority Report

- Aiming and shooting using your palm

- Activating a shield by bringing your fists close to your face, like a boxer in a defensive stance

- Force-grabbing objects around by opening the palm and then moving the hand backwards, like in Half-Life: Alyx

There are many other interactions, some of them simple but very natural and well made. What is incredible about this demo is that all the interactions are very polished and designed to work as well as possible for the user.

For instance, pressing a button just requires tapping it with your index finger, yet the detection is very reliable, and your fingertip never passes through the button, so the result feels very well made and realistic.
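To give an idea of how a poke button can feel this solid, here is a minimal Python sketch. It is not Meta’s code (the real project is C# in Unity), and it rests on a few assumptions: the tracking system reports, every frame, how deep the fingertip has gone past the button surface, and the `PokeButton` class and its thresholds are hypothetical. The press fires when the depth crosses one threshold and resets only below a shallower one (hysteresis), so tracking jitter can’t cause double presses, while the depth used to draw the button and the fingertip is clamped, so the virtual finger stops at the button.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PokeButton:
    """Hypothetical poke button. Depths are in metres, measured along the button's
    inward normal: 0 means the fingertip touches the surface, negative means above it."""

    max_travel: float = 0.010     # how far the button surface can be pushed in (assumed)
    press_depth: float = 0.006    # depth at which a press is registered (assumed)
    release_depth: float = 0.003  # shallower depth at which the press resets (assumed)
    pressed: bool = False
    on_press: List[Callable[[], None]] = field(default_factory=list)

    def update(self, fingertip_depth: float) -> float:
        """Process one tracking frame and return the clamped depth at which both the
        button surface and the rendered fingertip should be drawn."""
        if not self.pressed and fingertip_depth >= self.press_depth:
            self.pressed = True
            for callback in self.on_press:
                callback()
        elif self.pressed and fingertip_depth <= self.release_depth:
            self.pressed = False
        # Clamping keeps the virtual finger from visually going through the button,
        # even if the real (and tracked) finger keeps moving forward.
        return min(max(fingertip_depth, 0.0), self.max_travel)
```

Calling `update()` every frame with jittery depths such as 0.007, 0.005, 0.008 produces a single press, because the press only resets once the finger comes back above the 0.003 release depth.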

Shooting with the palm is tricky because you can’t aim precisely with your palm, so the palm shoots a laser for a few seconds, giving you time to adjust its aim until you hit the object you want to destroy.

Force-grabbing is also smart enough to work on a “cone of vision” centered on your palm. When you stretch out your palm, the system detects the closest object in the area you are aiming at with your hand, and automatically shows you a curved line that goes from your palm to it. This is smart for two reasons:

- It doesn’t require you to aim exactly at the object you want to grab, because aiming with the palm is unreliable. It uses “a cone of vision” from your palm, and then selects whatever object is inside it.

- It shows you a preview of the object you are about to grab, so if the system above selected the wrong one, you can change it by moving your hand before doing the gesture that attracts it.
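The cone selection described above can be sketched in a few lines of Python. This is a hypothetical illustration, not the Interaction SDK’s implementation: `select_in_cone`, the 30° half-angle, and the 5 m range are assumptions. It picks the nearest object inside the cone; picking the object closest to the cone’s axis would be an equally plausible rule.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _length(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def select_in_cone(palm_pos: Vec3, palm_dir: Vec3, objects: Dict[str, Vec3],
                   half_angle_deg: float = 30.0,
                   max_distance: float = 5.0) -> Optional[str]:
    """Return the name of the nearest object inside a cone cast from the palm, or None.

    palm_dir must be non-zero; it does not need to be normalized."""
    axis_len = _length(palm_dir)
    cos_limit = math.cos(math.radians(half_angle_deg))
    best_name, best_dist = None, math.inf
    for name, pos in objects.items():
        to_obj = _sub(pos, palm_pos)
        dist = _length(to_obj)
        if dist == 0.0 or dist > max_distance:
            continue
        # Cosine of the angle between the palm axis and the direction to the object:
        # the object is inside the cone if that angle is within the half-angle.
        cos_angle = _dot(to_obj, palm_dir) / (dist * axis_len)
        if cos_angle >= cos_limit and dist < best_dist:
            best_name, best_dist = name, dist
    return best_name
```

In an app, this would run every frame while the palm is open: the returned object is the one the curved preview line points to, and the grab is committed only when the pull gesture is detected, which is what gives you the chance to re-aim if the wrong object is highlighted.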

All the interactions are polished to cope with the unreliability of hand tracking. I loved how things were designed, and I’m sure that Meta’s UX designers ran many experiments before arriving at this final version. I think the power of this experience as a showcase lies in this level of polish, from which developers and designers can take inspiration to create their own experiences.

My impressions

As I’ve told you, as a user I found the experience cute and relaxing. But at the same time, I was quite frustrated by hand tracking. I tried the experience both with artificial and natural light, and in both scenarios my hands lost tracking many times. It’s very strange, because I found tracking more unreliable than in other experiences like Hand Physics Lab. This made my experience a bit frustrating: for instance, when I went to grab the first object to take the remote and activate the elevator, my virtual hand froze many times as it got close to it, and it took me many tries to actually grab it.

I also became more aware of how imprecise hand tracking can be: Meta did a great job of masking its problems with a smart UX, but the smart tricks described above were necessary precisely because hand tracking is still unreliable. Furthermore, since there are no triggers to press, you have to invent other kinds of interactions to activate objects, which may be more complex. Controllers are much more precise than hands, so, for instance, shooting would have been much easier with controllers, and the same goes for force grabbing.

This showed me that hand tracking is not yet reliable enough to be the primary input method for most VR experiences.

But at the same time, when things worked, performing actions with my hands felt much better. It’s hard to describe: when I use controllers, my brain knows that I have a tool in my hands, while with my bare hands, it feels more like it’s truly me performing the virtual action. After I assembled the “Thanos” gloves, I looked at my hands with the gloves on, and I had the weird sensation that those gloves were on my real hands. This never happened to me with controllers. Other interactions were satisfying too, like scrolling the “Minority Report” screen or force grabbing. Force grabbing is really well implemented here, and it is very fun to do. All of this showed me that hand tracking may be unreliable, but when it works, it gives you a totally different level of immersion than controllers. In the future, hand tracking will surely be very important for XR experiences, especially the ones that don’t require you to hold a tool in your hands (e.g. a gun in FPS games).

Since the experience is just 10 minutes long, I suggest everyone give it a try on App Lab, to better understand the sensations I am talking about.

First Hand – Review as a developer

The Unity project

The Unity project of First Hand is available inside this GitHub repo. If you are an Unreal Engine guy, well… I feel sorry for you.

The project is very well organized into folders, and the scene also has a very tidy hierarchy of GameObjects. This is clearly a production-quality project, something that doesn’t surprise me because it is a common characteristic of all the samples released by Meta.

Since the Interaction SDK can be quite tricky, my suggestion is to read its documentation first and only then check out the sample, or to look at the sample while reading the documentation, because some parts are not easy to understand just by looking at the sample.

Since the demo is full of different interactions, the sample gives you the source code of all of them, and this is very precious if you, as a developer, want to create a hand-tracked application. You just copy a few prefabs, and you can use them in your experience. Kudos to Meta for making this material available to the community.

Interaction SDK

I haven’t used the Interaction SDK in any of my projects yet, so this sample was a good occasion for me to see how easy it is to use.

My impression is that the Interaction SDK is very modular and very powerful, but also not that easy to use. For instance, if you want to spawn a cube every time the user gives a thumbs-up with either hand, you have to:

- Use a script to detect whether the left hand has only the thumb extended and the other fingers curled

- Use another script to detect whether the thumb of the left hand is pointing upward

- Use another script to check whether both of the conditions above are true

- Use another script to fire an event when the previous script detects that the condition is true

- Replicate all of the above for the right hand

- Write the logic that spawns the cube when the event is fired
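To make the list above more concrete, here is a rough Python sketch of the same chain of steps. It is not the Interaction SDK’s API: the `HandFrame` data, the function and class names, and the 0.8 “thumb up” tolerance are all hypothetical, and in the real SDK each step is a separate component configured in the Unity editor. Still, it shows why the approach is modular (each check is reusable) and also why it feels heavy for such a simple gesture.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


@dataclass
class HandFrame:
    """Hypothetical per-frame hand data that a hand-tracking system would provide."""
    fingers_extended: Dict[str, bool]  # finger name -> is the finger extended?
    thumb_dir_y: float                 # y component of the unit thumb direction (+y is world up)


Check = Callable[[HandFrame], bool]


def thumb_only_shape(hand: HandFrame) -> bool:
    """Step 1: only the thumb is extended, all the other fingers are curled."""
    others_curled = not any(hand.fingers_extended[f] for f in FINGERS[1:])
    return hand.fingers_extended["thumb"] and others_curled


def thumb_points_up(hand: HandFrame, min_y: float = 0.8) -> bool:
    """Step 2: the thumb points roughly upward (min_y is an assumed tolerance)."""
    return hand.thumb_dir_y >= min_y


def all_of(*checks: Check) -> Check:
    """Step 3: the combined condition is true only if every check is true."""
    return lambda hand: all(check(hand) for check in checks)


class RisingEdgeEvent:
    """Step 4: notify listeners when the condition goes from False to True."""

    def __init__(self, condition: Check) -> None:
        self.condition = condition
        self.was_active = False
        self.listeners: List[Callable[[], None]] = []

    def update(self, hand: HandFrame) -> None:
        active = self.condition(hand)
        if active and not self.was_active:
            for listener in self.listeners:
                listener()
        self.was_active = active


spawned_cubes: List[str] = []


def spawn_cube(hand_name: str) -> None:
    """Step 6: the game logic; here it just records that a cube was spawned."""
    spawned_cubes.append(f"cube from {hand_name} hand")


# Step 5: one detector per hand, both built from the same reusable checks.
thumbs_up = all_of(thumb_only_shape, thumb_points_up)
detectors = {name: RisingEdgeEvent(thumbs_up) for name in ("left", "right")}
for name, detector in detectors.items():
    detector.listeners.append(lambda name=name: spawn_cube(name))

# Simulated tracking frames.
THUMBS_UP = HandFrame({"thumb": True, "index": False, "middle": False,
                       "ring": False, "pinky": False}, 0.95)
THUMBS_DOWN = HandFrame(dict(THUMBS_UP.fingers_extended), -0.95)
OPEN_HAND = HandFrame({f: True for f in FINGERS}, 0.95)

for frame in (OPEN_HAND, THUMBS_UP, THUMBS_UP, OPEN_HAND, THUMBS_UP):
    detectors["left"].update(frame)
for frame in (THUMBS_DOWN, THUMBS_UP):
    detectors["right"].update(frame)
```

Holding the thumbs-up for several frames spawns only one cube, thanks to the rising-edge check, and a thumbs-down is rejected by the orientation check even though the hand shape matches.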

As you can see, the structure is very tidy and modular, but it is also quite heavy. By comparison, creating a UI button that spawns the cube when you point at it and click with the controllers is much easier. And speaking of buttons, to have those fancy buttons you can poke with your fingertip, you need various scripts and various child GameObjects, too. The structure is very flexible and customizable, but it also seems quite complicated to master.

For this reason, it is very good that there is a sample that gives developers ready-to-use prefabs they can copy and paste, because I guess that developing all of this from scratch can be quite frustrating.

The cross-platform dilemma

The Interaction SDK, which is part of the Presence Platform of the Meta Quest, is interesting. But sometimes I wonder: what is the point of having such a detailed Oculus/Meta SDK if it works on only one platform? What if someone wants to make a cross-platform experience?

I mean, if I base my whole experience on these Oculus classes, and on this fantastic SDK with fantastic samples… how can I port all of this to Pico? Most likely, I cannot. So I have to rewrite the whole application, maybe managing the two versions in two different branches of a Git repository, with all the management hell that comes with it.

This is why I am usually not that excited about updates to the Oculus SDK: I want my applications to be cross-platform and work on the Quest, Pico, and Vive Focus, and committing to only one SDK is a problem. For this reason, I’m a big fan of Unity.XR and the Unity XR Interaction Toolkit, even if these tools are much rougher and less mature than the Oculus SDK or even the old SteamVR Unity plugin.

OpenXR may save us, letting us develop something with the Oculus SDK and run it on the Pico 4, too. But for now, the OpenXR implementation in Unity is not very polished yet, so developers still have to choose between going for a single platform with a very powerful SDK and going cross-platform with rougher tools… or using third-party plugins, like AutoHand, which does a good job of providing physics-based interactions in Unity. I hope the situation will improve in the future.

I hope you liked this deep dive into First Hand, and if so, please subscribe to my newsletter and share this post on your social media channels!

(Header image by Meta)

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.