Hands-on Distance Technologies: promising glasses-free AR, at an early stage

When I was at AWE, one of the demos I was most curious about was the one by Distance Technologies, the company that promises to offer glasses-free augmented reality. I was lucky enough to be one of the few who managed to try it in a private showcase in a hotel room close to the convention center, and I'm happy to tell all of you who could not be there about my experience.

Distance Technologies

Distance Technologies is a startup that came out of stealth a few weeks ago, promising “glasses-free mixed reality”. In its first press release, the company claimed to be able to “transform any transparent surface into a window for extended reality with a computer-generated 3D light field that mixes with the real world, offering flawless per-pixel depth and covering the entire field of view for maximum immersion”. This could be useful in many sectors, starting of course with automotive and aerospace: smart windshields are something many companies are investing in, because they are expected to be part of the vehicles of the future.

The premise was very interesting, and two things made it believable to me. The first is that the startup came to light revealing that it had raised $2.7M from a group of investors including FOV Ventures and Maki.VC. If investors put that kind of money into it, the company probably has something interesting in hand (the Theranos case proved that's not always true, but investors usually do some due diligence, especially in Europe). The second is that the founders are Urho Konttori and Jussi Mäkinen, two of the co-founders of Varjo, one of the leading manufacturers of enterprise PC VR headsets. I personally know Urho, and he's not only a very nice guy, but also reliable and technically knowledgeable, so if he promises something, I'm confident he can deliver something good (Urho, after all these nice words about you, I expect you to at least buy me lunch next time I'm in Helsinki :P).

Distance Technologies Prototype

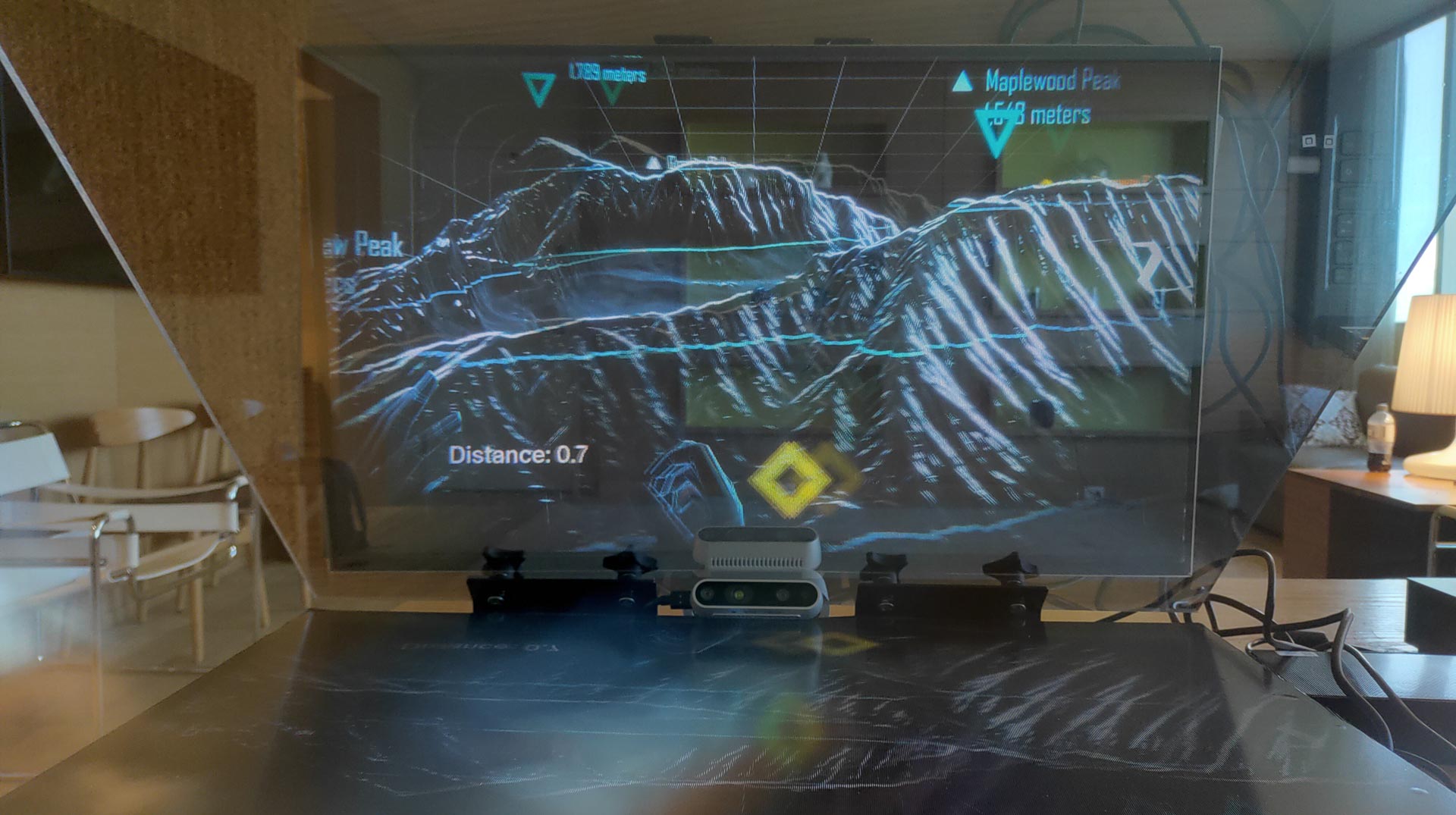

When I arrived in the demo room, I could finally see the Distance Technologies prototype: there was a planar surface on the desk and, on top of it, a pane of glass inclined at 45 degrees. At the edge between the two surfaces, there was an Intel RealSense sensor.

In front of this setup, there was a Leap Motion Controller 2 sensor. Around it, there were various computers, the computational units used to render the visuals on the device. There was also an Azure Kinect in the room, but I was told it was there for some specific demos involving avateering and was not part of the prototype's setup.

The idea is that the device's computational unit creates the elements to render and sends them to the “planar surface”, which projects them onto the inclined glass. Since the glass is tilted at 45 degrees, it reflects those light rays into your eyes, and since it is also transparent, you can see both the real world in front of you and the virtual elements generated by the system, creating a sort of augmented reality.
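For the curious, the geometry of this “reflection trick” can be sketched in a few lines of Python. This is a minimal sketch under made-up assumptions (a flat display lying on the desk, a glass plane tilted at exactly 45 degrees, and arbitrary dimensions in metres that are not the prototype's real ones): mirroring each display pixel across the glass plane gives the point where your eyes perceive it, and the result is that the flat panel shows up as an upright virtual screen standing behind the glass.

```python
import math

def normalize(v):
    """Return the vector v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect_point(p, normal, offset):
    """Mirror point p across the plane {x : dot(normal, x) == offset}.

    `normal` must be unit length."""
    # A flat partially reflective glass behaves like a mirror for the display light:
    # the eye sees each display pixel at its mirror image behind the glass.
    dist = dot(p, normal) - offset  # signed distance of p from the plane
    return tuple(pc - 2.0 * dist * nc for pc, nc in zip(p, normal))

# Hypothetical geometry, NOT the real prototype's dimensions (metres):
# x = right, y = up, z = away from the viewer.
# The display lies flat on the desk (y = 0). The glass is the plane y + z = C:
# tilted 45 degrees, touching the desk at z = C and leaning toward the viewer.
C = 0.30
GLASS_NORMAL = normalize((0.0, 1.0, 1.0))
GLASS_OFFSET = C / math.sqrt(2.0)  # y + z = C rewritten as dot(n, x) = C / sqrt(2)

for z in (0.05, 0.15, 0.25):  # pixels at different depths on the flat panel
    vx, vy, vz = reflect_point((0.0, 0.0, z), GLASS_NORMAL, GLASS_OFFSET)
    print(f"pixel at z={z:.2f} m -> seen at height y={vy:.2f} m, depth z={vz:.2f} m")
```

With these numbers, every pixel ends up at the same depth (z = 0.30, the line where the glass touches the desk), and pixels closer to the viewer on the panel appear higher up: that's why a horizontal display can produce a vertical image “in the world”. The lightfield part, which is the real specialty of the company, is not modeled here at all.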

But the visuals the system creates are not just flat 2D text like in some smart windshields: they are supposed to be lightfields, that is, realistic 3D elements with true depth. This is Distance Technologies' specialty, and this is where the Intel RealSense comes into play: the system detects where your head (and so your eyes) is and creates the lightfield optimized for your point of view. It's a bit like a very advanced AR version of the Nintendo 3DS: the system detects where you are and tries to send light rays to your eyes in such a way that, from your point of view, the virtual elements appear in 3D. The Leap Motion Controller is meant for interacting with the system naturally using your hands, but I guess it is optional in the setup, and you could interact with it using whatever peripheral you want.
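To give an idea of why head tracking matters, here is a minimal Python sketch of a generic head-tracked autostereoscopic scheme. It is NOT how Distance Technologies does it (they haven't disclosed that, and a real lightfield display is far more sophisticated): it assumes a hypothetical display that emits a fixed number of discrete views, each covering a narrow horizontal angle, plus an assumed average interpupillary distance of 63 mm. The tracked head position is split into two eye positions, and each eye is mapped to the view zone it falls into, so that the renderer knows which perspective to send where.

```python
import math
from dataclasses import dataclass

@dataclass
class Vec3:
    """Position in metres: x = right, y = up, z = distance from the display toward the viewer."""
    x: float
    y: float
    z: float

# Assumed average interpupillary distance (hypothetical; real systems would calibrate it).
IPD_M = 0.063

def eye_positions(head_center: Vec3, ipd: float = IPD_M) -> tuple[Vec3, Vec3]:
    """Split a tracked head position into left/right eye positions.

    Assumes the head faces the display squarely (no yaw, pitch or roll)."""
    half = ipd / 2.0
    left = Vec3(head_center.x - half, head_center.y, head_center.z)
    right = Vec3(head_center.x + half, head_center.y, head_center.z)
    return left, right

def view_index(eye: Vec3, num_views: int, zone_width_deg: float) -> int:
    """Return which of num_views discrete viewing zones the eye falls into.

    Each zone is zone_width_deg wide horizontally, and the whole fan of zones is
    centred on the display normal. Eyes outside the fan are clamped to the edge zones."""
    # Horizontal angle between the display normal and the eye, seen from the display centre.
    angle_deg = math.degrees(math.atan2(eye.x, eye.z))
    idx = math.floor(angle_deg / zone_width_deg + num_views / 2)
    return max(0, min(num_views - 1, idx))

# Usage: a head 60 cm in front of the display, then moved 2 cm to the right.
for head_x in (0.0, 0.02):
    left, right = eye_positions(Vec3(head_x, 0.0, 0.60))
    print(f"head x={head_x:.2f} m -> left view {view_index(left, 8, 2.0)}, "
          f"right view {view_index(right, 8, 2.0)}")
```

With 8 zones, each 2 degrees wide, and the head 60 cm away, the left and right eyes land in zones 2 and 5; moving the head 2 cm to the right moves them to zones 3 and 6 at the same instant. In a scheme like this, every zone change is a discrete jump, which is one generic way a “snap” between vantage points can become visible when the tracking and the optics are not yet perfectly tuned.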

Hands-on Distance Technologies

I sat down in front of the device and started seeing the augmentations. The system was showing some simulated 3D maps of mountains, I guess to simulate the use case of displaying a 3D map through a car's windshield.

The visuals of the virtual elements were pretty crisp and the 3D effect believable. Lightfields are more advanced than the Nintendo 3DS of the example above: they try to reconstruct the rays that an object would emit toward your eyes if it were real. So the perception of depth you get when experiencing a lightfield is very believable. It was pretty nice to see 3D elements floating in front of me, beyond the transparent glass, without needing to wear glasses. And as I've said, the quality of the visual elements and their 3D perception was great. Urho told me they have a technology that optimizes the perception of depth depending on where your head is (which is tracked by the RealSense), and I think the effect is noticeable.

But the system also showed me all the problems of being in a prototype state: the visuals suffered from various artifacts, and when I moved my head, I could see the switch from one vantage point to the other, similarly to the Nintendo 3DS: the visuals started duplicating and then snapped to the new point of view. From some points of view, I could see duplicated elements, from others some halos, and from others the depth of the object looked a bit “compressed” (the latter especially when I moved my head up or down relative to the normal height of a seated person). Then, if I put my head too close to the system, my eyes started to cross, so I had to move my head a bit farther from the device to avoid this effect. At the end of the 5-to-10-minute demo, I was already suffering from eye strain.

Besides all of this, the semitransparent glass onto which the visuals were projected was slightly dark, so it dimmed the real-world elements I had in front of me a bit. This is a well-known effect in AR glasses that use the same “reflection trick”, too.

I reported all these issues to Urho and Jussi, and they told me they were aware of all of them. They made clear that this is the showcase of an early prototype they have been working on for just a few months (which, in the hardware world, is like having started yesterday), and that the finished system should have none of these shortcomings. They added that they did not want to spend years in stealth mode before showcasing what they have: they wanted to be very open with the community and show all their progress from the early stages.

Final impressions

I am a tech guy, so I know what a “prototype” is: something at a very early stage, usually full of problems, that shows the potential of a certain technology. I personally think that Distance Technologies nailed this stage, because it showed me something that, yes, was incredibly buggy, but that made me understand the potential of what they are working on. In the moments when the system was working well, it was pretty cool to see these full-3D augmentations in front of me.

Now the team has to keep working on this project and go from this early prototype to an advanced prototype and then to a product demo. That is when we will see if this company can really fulfill its promise of glasses-free AR for transparent surfaces like windshields. For now, from my hands-on, I can say that the technology is promising, but it's too early-stage to be used. I hope that one year from now, at the next AWE, I will be able to describe a system that is in beta and closer to being used in real use cases. The team behind this company is great, so I'm inclined to be confident that this is going to happen.

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.