Leap Motion full speed ahead on mobile platforms, emulates touch with sound

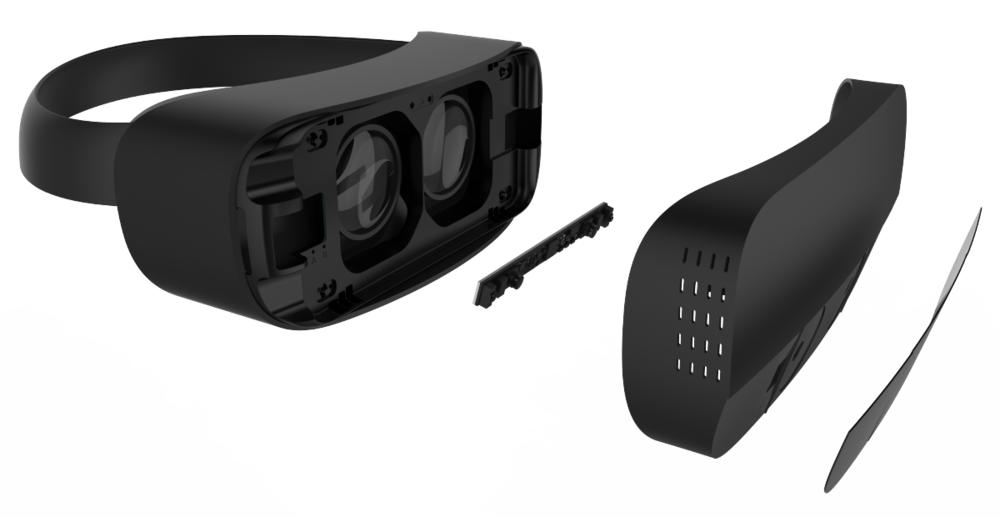

Yesterday I published a post announcing some big news about Leap Motion. The most important is that Leap Motion is now completely focused on being embedded into standalone virtual reality headsets (particularly the ones implementing the Snapdragon 835 reference design) and no longer on selling controllers. This means that the new and improved Leap Motion v2 will not be sold as a separate controller; it will only be embedded inside standalone headsets.

Today I’ll give you some more info about Leap Motion and its vision of the future of VR. It will be interesting, especially the part about how Leap is trying to get around the fact that it can’t provide any haptic feedback to the user.

As a reminder: this two-post series was made possible by an interview with the super-kind Alexander Colgan of Leap Motion, so these articles express the real point of view of the company.

VR is the killer application of Leap Motion

Leap Motion has always been an awesome controller, and we all know it started as a PC peripheral. Some journalists said that “it was a solution looking for a problem”, since it was really cool but couldn’t find an ideal application for PC users (so, no killer application). This held true until the company decided to mix Leap Motion with Virtual Reality: VR has proven to be the ideal field for it.

Alex said that they initially thought Leap could be the new input mechanism for PCs, with people replacing mouse and keyboard with a more natural input mechanism like hand interaction, but this proved to work really badly, because:

- After 30 years of mouse and keyboard, it is very hard to tell people to change their input mechanism. Mice and keyboards are the standard, all programs are designed to be used with them and we are all accustomed to using them every day. Changing people’s habits is really hard, even if you invent something better and more innovative;

- Judging depth on a flat 2D screen is difficult. If you have ever played some Leap Motion PC games, you know the struggle of trying to work out the exact depth of the virtual hand inside the virtual world shown on your screen. Up and down movements are OK, but it is really hard for our brain to map the forward/backward movement of the hand to the depth of the virtual hand. The problem is that you’re trying to map a physical 3D movement onto a flat screen. Even simple applications like the ones with flowers or dancing robots were barely usable because of this.

To that, I would also add that while mouse and keyboard let your hands stay at rest, Leap Motion requires you to keep your arms lifted, which is more tiring. This comfort is one of the reasons for the success of the mouse and also one of the reasons why I think that headset remote controllers are cool.

After the VR renaissance, Leap Motion started to understand the potential of using its device together with VR headsets: we could have our real hands in VR. Virtual Reality did not have the same problems as standard computing, since:

- In VR there weren’t (and still aren’t) standards, so it was possible to try to propose a new input mechanism for all the upcoming apps;

- In VR you have a 3D world, so you see your virtual hands exactly where you expect them to be, where your real hands are. Your brain doesn’t have to map anything, it just sees and uses hands;

- People would not have to learn how to use Leap Motion, since they would just use their hands like in real life.

So, while still supporting PC as a platform, they started investing a lot in VR. “VR interactions” was the problem for which Leap Motion was the solution. VR was the natural playground where Leap engineers could realize their vision of offering truly natural interactions. Out of this the Orion upgrade was born, which magically made true hand presence in VR possible.

This required a lot of effort from the engineers. When you use Leap Motion in desktop mode, the device is fixed in position and just looks at the ceiling, which it usually sees as a flat, super-dark background. When Leap Motion is mounted on a headset, it moves around continuously, so the background keeps changing (and with it, the lighting conditions) and is absolutely not guaranteed to be dark (since it points at our rooms, it can be all sorts of things). If you’re in computer vision, you surely know the term “segmentation” and you know how hard it is (if you’re not in computer vision: basically I mean separating the part of the image that represents the hand Leap Motion is tracking from the useless background). Adding clutter and movement to the background makes tracking much harder.
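To give an idea of why a moving, cluttered background hurts segmentation, here is a toy sketch in Python. It is only an illustration under my own assumptions, not Leap Motion's actual algorithm: I assume the hand shows up as bright pixels in the infrared image and I separate it from the background with a single fixed brightness threshold. The frames, the threshold value and the function names are all invented for the example.

```python
def segment_by_brightness(image, threshold):
    """Return a binary mask: 1 where the pixel is brighter than the threshold."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def mask_accuracy(mask, truth):
    """Fraction of pixels where the mask agrees with the ground truth."""
    total = sum(len(row) for row in truth)
    correct = sum(
        m == t
        for mask_row, truth_row in zip(mask, truth)
        for m, t in zip(mask_row, truth_row)
    )
    return correct / total


# Ground truth for both frames: the hand covers the 2x2 block in the centre.
truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

# Made-up "desktop" frame: bright hand over a flat, dark ceiling.
desktop_frame = [
    [10, 12, 11, 9],
    [10, 200, 210, 12],
    [11, 205, 198, 10],
    [9, 10, 12, 11],
]

# Made-up "headset" frame: same hand, but the room behind it has bright spots.
headset_frame = [
    [10, 220, 11, 180],
    [190, 200, 210, 12],
    [11, 205, 198, 230],
    [215, 10, 170, 11],
]

THRESHOLD = 128  # arbitrary value chosen for this example

desktop_acc = mask_accuracy(segment_by_brightness(desktop_frame, THRESHOLD), truth)
headset_acc = mask_accuracy(segment_by_brightness(headset_frame, THRESHOLD), truth)

print(f"desktop accuracy: {desktop_acc}")
print(f"headset accuracy: {headset_acc}")
```

With the dark background the naive threshold labels every pixel correctly, while in the cluttered frame every bright background pixel is mistaken for hand. A real tracker has to do something much smarter than this, which is where the engineering effort goes.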

Furthermore, hands in VR are seen from behind, while in PC setups the sensor was usually able to see the palm. All these modifications required (and still require) a lot of effort from Leap engineers, so the switch to VR has been a big investment. But, given the results, I can say that it has been totally worth it.

PC Leap Motion is still somehow there

There are still people using Leap Motion for desktop PC applications. Leap Motion is surely one of the best hand trackers out there, so it is fundamental for all research projects involving hand detection / tracking / interaction. Even Kinect, while slowly dying, is still very popular among research centers… because this kind of affordable tracking device is fundamental for researchers running experiments.

Apart from research centers, there are some niches where it is popular, like artists, animators, designers, directors (who use it to manipulate the camera frame in movies with a simple gesture), musicians (to shape sounds in 3 dimensions), etc…

Some popular apps on the Airspace Store are not VR ones. For example Geco MIDI is used by thousands of musicians in the world.

I’m wondering what will happen to these apps in the future: since Leap Motion will never be sold again as a standalone controller, we won’t be able to use these apps with our PCs anymore…

Leap Motion SDK is for all platforms and devices

Leap Motion has announced the new device, Leap Motion v2, so I wondered if this also meant a new SDK for the new device. The answer is “no”.

The Leap SDK will stay the same and will keep being improved with new features and tools. The SDK will support both devices (v1 and v2) and all platforms (PC, mobile, standalone). I think that this cross-platform support is awesome and gives expert Leap developers the power to develop for multiple platforms using the tools they already know well.

SDK is highly optimized for mobile

Alex stressed a lot that all Leap Motion SDKs and tools (e.g. the Interaction Engine) are super-optimized for mobile. This is great for us developers, since it makes it possible to build cross-platform projects involving Leap Motion. We all know the hassle of porting a PC Virtual Reality project to mobile platforms: a lot of optimizations have to be carried out on shaders, poly count and such, otherwise the frame rate never reaches 60 FPS (and whatever you do, it never reaches that threshold anyway, damn :D). Leap guarantees that all its assets, including the Unity plugins, are optimized for mobile, so during this kind of PC-to-mobile porting, the Leap Motion part is the one you have to worry about the least.

This has been possible thanks to the optimized LeapC APIs, which were released in February 2016. What is LeapC? Well, according to its official website:

LeapC is a C-style API for accessing tracking data from the Leap Motion service. You can use LeapC directly in a C program, but the library is primarily intended for creating bindings to higher-level languages. LeapC provides tracking data as simple structs without any convenience functions.

Alex told me that LeapC has been so deeply optimized that its APIs are faster than the native C++ Leap Motion APIs! And since almost all Leap SDKs, including the Unity plugin, are based on LeapC, they are super-optimized and super-fast too. This optimization is crucial especially for mobile devices, since it means lower resource consumption.
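To make the idea of “simple structs without any convenience functions” a bit more concrete, here is a minimal Python sketch of what a higher-level binding could look like. Be careful: the struct layouts and names below (`Vec3`, `HandSample`, `Hand`, the 25 mm pinch threshold) are hypothetical and invented by me for illustration, they are not the real LeapC definitions. The point is only the pattern: the C side hands you plain data, and the binding adds the comfortable helpers on top.

```python
import ctypes
import math


class Vec3(ctypes.Structure):
    # Hypothetical plain-data struct (NOT the real LeapC layout).
    _fields_ = [
        ("x", ctypes.c_float),
        ("y", ctypes.c_float),
        ("z", ctypes.c_float),
    ]


class HandSample(ctypes.Structure):
    # Hypothetical per-frame hand data: positions only, no helper methods.
    _fields_ = [
        ("palm", Vec3),
        ("index_tip", Vec3),
        ("thumb_tip", Vec3),
    ]


def distance(a, b):
    """Euclidean distance between two Vec3 structs."""
    return math.sqrt((a.x - b.x) ** 2 + (a.y - b.y) ** 2 + (a.z - b.z) ** 2)


class Hand:
    """Binding-level wrapper that adds the convenience the raw struct lacks."""

    def __init__(self, sample):
        self._sample = sample

    @property
    def pinch_distance(self):
        # Distance between index fingertip and thumb tip, in the struct's units.
        return distance(self._sample.index_tip, self._sample.thumb_tip)

    def is_pinching(self, max_distance=25.0):
        # The default threshold is an arbitrary value for this example.
        return self.pinch_distance < max_distance


# A made-up sample, filled in by hand instead of coming from a tracking service.
sample = HandSample(
    Vec3(0.0, 200.0, 0.0),   # palm
    Vec3(3.0, 204.0, 0.0),   # index fingertip
    Vec3(0.0, 200.0, 0.0),   # thumb tip
)
hand = Hand(sample)
print(hand.pinch_distance, hand.is_pinching())
```

Keeping the low-level layer as dumb data is what makes it easy to wrap from many languages; each binding can then decide which helpers are worth adding.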

Some of the new tools have been conceived specifically to be optimized for mobile usage. For instance, the Graphic Render lets developers have amazing UIs even on mobile headsets. The new UI widgets offered by Leap Motion have incredible graphical quality (possible thanks to the Graphic Render) and, thanks to their integration with the Interaction Engine, also offer amazingly natural interaction. If you have tried the first version of Leap Motion Widgets, you will notice an enormous difference in quality.

Mobile optimizations are an obvious consequence of Leap Motion’s decision to become a device completely dedicated to standalone mobile headsets. Alex told me that this interest in mobile platforms started when they noticed that mobile headsets offered complete freedom from wires and external cameras, but also had a big gap in experience quality compared to PC headsets. They tried to close this gap, and this is how Leap Motion’s interest in mobile started.

IR interference greatly reduced

IR interference has been a big nuisance for Leap Motion users, especially in the beginning. The device seemed to find interference everywhere, even when used in a desert cave.

Leap Motion is working hard on this, and over the last 6 months interference has affected tracking quality a lot less. Thanks to tracking improvements and to the high-def cameras on the v2 device, interference issues are greatly reduced. Alex says that some problems can still arise when there are big infrared sources in the room, when IR light causes lens flare on the controller (but this requires particular lighting angles) or when IR light hits the user’s hands directly. “The biggest competitor of our technology is the sun” says Alex. Well, a battle against the sun seems very hard to win…

In multiplayer use of Leap Motion, where two people wearing Qualcomm headsets (with Leap Motion on) are directly facing each other, of course there could be issues. Engineers are at work to mitigate this problem.

Qualcomm reference design explanation

Since I mentioned Qualcomm headsets, I want to briefly explain what the Qualcomm reference design means. Basically, Qualcomm makes mobile processors, so it has released a document stating how to make a standalone headset employing Qualcomm chips, or a mobile headset using smartphones that employ Qualcomm chipsets. This document says that you can take certain kinds of AMOLED displays, sensors, processors (Snapdragon, of course) and Leap Motion, and that if you connect them in the proper way, they’re guaranteed to work well together. By “work well” I don’t only mean that they’re guaranteed to let the user experience virtual reality, but also that they guarantee a high-quality result (high FPS, low battery usage, etc…).

This is a big advantage for OEMs wanting to create their own VR headsets, since they don’t have to experiment, run quality tests and so on… they just read the Qualcomm specifications, buy the required pieces, assemble them and they’re guaranteed to have created an awesome VR product. This saves a lot of time and money for OEMs, which basically only have to design the appearance and comfort of the final product.

Leap Motion aims at emulating haptics with sound

I had to ask Alex what Leap’s answer is to the objection people currently raise against Leap Motion: that it can’t provide any haptic feedback at all. I also added that currently the most used input devices for VR are controllers like Oculus Touch. He answered on many levels:

- There is no ideal VR controller. The VR control scheme should be chosen on the basis of the VR application: voice, remote, controllers and Leap Motion are all valid choices. For example, for firing a gun, like when playing Robo Recall, Oculus Touch controllers are optimal, since they can somewhat emulate the shape and the trigger of a gun.

- VR controllers are far from optimal. As I said myself in my Oculus Touch review, VR controllers don’t offer true hand presence. They are awesome, but it’s not like using your hands in real life. You have to learn how to use them (and I still confuse the index and middle finger triggers a lot when using Touch) and the emulated hand pose is not exactly the same as that of your real hand. Input is mostly on/off, with no intermediate states. As for the haptic feedback they’re able to provide, it’s very rough, since it is based only on the plastic of the controller and some vibration. This is far from real haptic feedback: the plastic has a shape that doesn’t necessarily match the physical shape of the virtual object you’re handling; furthermore, you feel the controller in your hands even when your hand is empty in the virtual world, and this is wrong. So, VR controllers offer haptic feedback, but it is not realistic at all. For better haptics, professional devices like Dexta Robotics exoskeletons are required, but these are expensive.

Alex says that they don’t believe controllers are the ultimate future of virtual reality, since “VR is more than this”.

Me playing with Oculus Touch… for such uses they’re cool. For others, they’re not optimal…

- Having true hand presence is better. Of course Alex can only say that his device is better than the others (at Immotionar I always said the same thing about my solution when compared to others!). The reason he gives is that with Leap Motion you have your real hand and you use it exactly as in the real world. So you don’t have to learn anything: you just use your hands in the virtual world as in the real world. Furthermore, there is no on/off mechanism: you can bend your fingers at whatever angle you want, for instance. You just operate a UI with the finger you prefer, as you would do with a touch panel in real life.

- Contactless haptic devices may help. If you missed it, there’s a startup called Ultrahaptics that is working on a device offering haptic feedback at a distance using ultrasound waves. The device has already been showcased together with Leap Motion and got good feedback. So, using Ultrahaptics with Leap Motion, we can obtain full hand tracking plus some form of haptics without wearing anything. Cool, isn’t it? Alex believes that these setups may be useful especially for enterprise use cases. For example, a surgery intern may use one to learn how to operate on a virtual cadaver with their free hands tracked by Leap Motion, with the force feedback of the body provided by the Ultrahaptics device. I’d add that this solution helps a lot in all situations requiring external haptic feedback (like me touching something), but can’t do much to emulate internal haptic feedback (like me holding something in my closed hand). As I’ve said: “there is no ideal VR controller”.

Ultrahaptics device close-up. All those cylinders should give your hands a sensation of touch even if you don’t touch anything (image by UltraHaptics)

- Haptic feedback may be emulated with visuals and sounds. The perception of things in VR is malleable, and they have studied this a lot. For example, when showing their PlasmaVR demo (see the video below), they noticed that people recoiled because of the visuals and sounds of the experience.

They understood that a “phantom sensation of touch” can be emulated with a carefully designed combination of visual and sound feedback. For each interaction the user has with objects, you have to provide this kind of combined feedback to give their brain a sensation similar to touch. That’s why their Blocks demo is heavily sound-designed: it has 33 sounds that are combined to enhance the sense of physicality.

So, this is not exactly like having real haptics, but the brain is tricked enough that for most applications (especially UI stuff) it is good enough. They are continuing to study this topic, to understand further how to offer this sense of phantom touch.
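Just to show how a developer might organize this kind of combined feedback, here is a small Python sketch. It is entirely my own assumption of how such a system could be structured, not how the Blocks demo is actually built: the event names, the sound clip names, the volume formula and the `feedback_for` function are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    sounds: tuple    # names of the sound clips to layer together
    volume: float    # playback volume, from 0.0 to 1.0
    highlight: bool  # whether the touched object should light up


# Hypothetical cue table: each interaction event layers one or more sounds.
SOUND_LAYERS = {
    "hover": ("soft_hum",),
    "touch": ("tap",),
    "grab": ("tap", "grip_creak"),
    "release": ("whoosh",),
}


def feedback_for(event, speed_m_s=0.0, max_speed_m_s=2.0):
    """Pick the combined audio/visual feedback for one interaction event.

    Faster hand movement gives louder sounds, clamped to the 0.2-1.0 range.
    """
    if event not in SOUND_LAYERS:
        raise ValueError(f"unknown interaction event: {event}")
    loudness = min(max(speed_m_s / max_speed_m_s, 0.0), 1.0)
    volume = 0.2 + 0.8 * loudness
    # Everything except a release keeps the object visually highlighted.
    return Feedback(SOUND_LAYERS[event], volume, event != "release")


grab_feedback = feedback_for("grab", speed_m_s=1.0)
print(grab_feedback)
```

The idea is simply that every interaction produces both a sound and a visual response at the same moment, so the brain gets two consistent signals in place of the missing touch.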

These answers prove that the battle between Leap Motion and physical controllers is still on. We’ll see which one will be the preferred input method in five years. If you know me, you know that I’m an advocate of controller-less and natural VR, so you know where my bet goes… (let me know yours in the comments!)

And that’s all for today, too. I hope that these two articles have given you some insights into Leap Motion and, more in general, into hand tracking and Virtual Reality. I really thank Alex a lot for the interview he granted me: it has been one of the most interesting interviews of my life!

This was a fun and wide-ranging conversation 😀

Thaaaaaaank you Alex! For me it has really been an epic moment!

Hiya Tony! After reading both articles I have a strong doubt. Are you sure that they won’t be selling any standalone version of the controller from now on? I mean, although it would be great Leap Motion releases a standalone v2 controller, imo it makes sense that they at least continue selling the standalone v1 (because of all the actual uses you mentioned), and just let v2 and future versions coexist specifically for embedded devices and HMDs. Did you have any chance to confirm this with Alex?

From a technical standpoint I agree with you, but we’ll have to see if what you propose is possible from a business standpoint. I mean, for sure they won’t abruptly stop selling the v1, but I don’t know how long they will keep selling it. Let’s ask @alexcolgan:disqus !