MatchXR: SeeTrue Technologies may have solved the calibration issue for eye-tracking

Yesterday I attended MatchXR for the first time. It is one of the best XR events in Nordic Europe. I made many new friends and tried some interesting XR solutions, and rest assured I’ll publish a few posts about it in the coming days. Today I want to tell you about my experience with one of the products I tried at the event, a pretty interesting one because it solves a known problem in our space: SeeTrue Technologies.

SeeTrue Technologies

SeeTrue Technologies was the first solution I tried at MatchXR. The company does eye tracking using IR LEDs to light up the eyes and IR cameras to capture images of the eyes, from which an algorithm detects the orientation of the pupils. This is basically what everyone else is doing in the eye tracking space, including well-known companies like Tobii, so I candidly asked SeeTrue Technologies what they were offering beyond the others to be relevant.

Their answer was that their solution, which is made of custom hardware and software, needs to be calibrated only once. Calibration is one of the current pains of eye tracking in XR: if you want great accuracy, you have to re-calibrate your eye-tracking solution every time you use it. This is because the calibration is made for the current position of the headset on your head: if the position is even slightly different from last time, the detected eye pose can be skewed. And the more the headset's pose differs from the one it had when you last calibrated it, the more wrong the detected eye direction will be. There are ways to mitigate this problem (for instance, some solutions only require you to look at a single point to perform the calibration), but it has not been solved yet.

Meet SeeTrue Technologies, whose claim is that you calibrate only once and then you can use the eye-tracking solution forever. I tried the solution myself to verify the claim: I wore a glasses frame with eye tracking embedded inside and calibrated it. I started looking around, and on the computer screen I could see a red dot drawn on top of the camera feed from the glasses’ point of view, and this red dot was going exactly where I was looking. It was a quick demo, so I don’t have data about the accuracy in degrees, but let’s say that the red dot was roughly always where it was expected to be, so eye tracking was working correctly. At that point, I slightly moved the glasses and kept looking around, and I could still see the red dot where it was supposed to be. I decided to be pretty extreme and heavily skewed the glasses on my nose, as if someone had punched me in the face while I was wearing them, to the point that the eye tracking camera for my right eye could no longer see my whole eye. And well, even in these conditions, the damn red dot was still going exactly where it was supposed to go. That was impressive and confirmed that the solution was doing exactly what the company was claiming: I had calibrated once, and then eye tracking was working no matter how I put the glasses on my face. I was pleasantly surprised about that: one of the big problems of eye tracking in XR has been solved.

I asked the guy who was demoing the system how they managed to do that. He told me that of course he couldn’t tell me everything about their secret sauce, but just to give me a hint, he said that they are using a different way of tracking the eyes. While other companies basically use ML to detect the eye gaze direction from the image captured by the IR camera, SeeTrue Technologies tries to reconstruct a “digital twin of the eye”. The basic idea is to reconstruct how the eyes are made, so that once this model has been built, how the glasses are worn becomes less relevant, because you just keep fitting the currently read data to that immutable model. This is a very vague explanation, but it was enough to start getting an idea of how things work.
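To make the intuition a bit more concrete, here is a toy sketch of why a geometric eye model can be less sensitive to how the glasses sit on the face. It is not SeeTrue's algorithm, which I don't know: it simply assumes that the tracker can estimate the 3D eyeball center and pupil center in the camera's coordinates, and it only simulates a pure sideways slip of the glasses (no rotation). All names and numbers are hypothetical.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def gaze_from_eye_model(eyeball_center, pupil_center):
    """Gaze direction as the unit vector from eyeball center to pupil center."""
    return normalize(tuple(p - e for p, e in zip(pupil_center, eyeball_center)))

def shift(point, offset):
    """Translate a 3D point by an offset."""
    return tuple(p + o for p, o in zip(point, offset))

def angle_deg(a, b):
    """Angle in degrees between two unit vectors."""
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(dot))

# Hypothetical eye geometry in camera coordinates (millimetres).
eyeball_center = (0.0, 0.0, 35.0)
pupil_center = (3.0, 1.0, 24.0)
gaze_before = gaze_from_eye_model(eyeball_center, pupil_center)

# The glasses slip: in the camera frame, every point of the eye
# appears shifted by the same offset.
slip = (4.0, -2.0, 0.0)

# Model-based approach: both points are re-estimated after the slip,
# so the direction between them does not change.
gaze_model = gaze_from_eye_model(shift(eyeball_center, slip),
                                 shift(pupil_center, slip))

# Naive approach: the eye position is frozen at calibration time and
# only the pupil is re-detected, so the slip is mistaken for eye movement.
gaze_naive = gaze_from_eye_model(eyeball_center, shift(pupil_center, slip))

print("model-based error (deg):", angle_deg(gaze_before, gaze_model))
print("naive error (deg):", angle_deg(gaze_before, gaze_naive))
```

In this simplified setting the model-based direction is unaffected by the slip, while the frozen-calibration estimate is off by many degrees. A real system also has to deal with rotations, partial views of the eye, and noise, which this sketch ignores.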

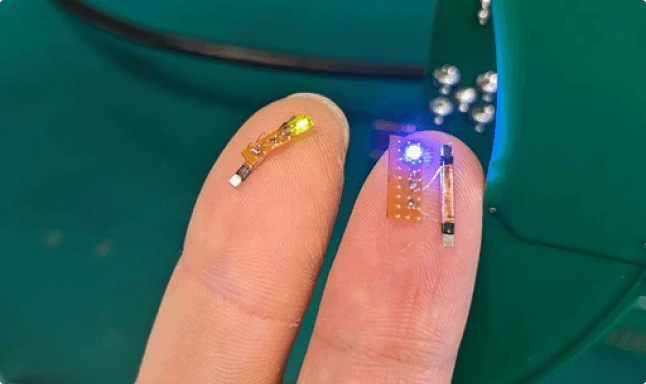

Another thing I was shown was a little device that does eye tracking for microscopes. I was told that there are already microscopes that integrate augmented reality, and SeeTrue Technologies can add eye tracking to enhance the functionalities of these devices. The company has created two little circular mounts that integrate eye tracking and can be added on top of the microscope so that they can track the eyes of the doctor using it. But what are the possible applications? Well, one of them is to add a little interaction layer: the user can stare at specific elements of the microscope's UI to activate them. Another very useful one is to create a heatmap of where the scientist is looking. This is super important for training, because you can see if the medical student has looked at the right points of the image before making a diagnosis. SeeTrue claims to be the first company to propose this kind of solution. Unfortunately, there was no microscope there to test it in action, but they showed me a video, which I’m pasting here below.
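To give an idea of what such a gaze heatmap could look like in code, here is a minimal sketch. It assumes the eye tracker delivers gaze samples as (x, y) pixel coordinates on the microscope image at a fixed rate. The function names, the grid size, and the sample data are all made up for illustration and have nothing to do with SeeTrue's actual software.

```python
import math

def gaze_heatmap(samples, width, height, cell):
    """Count gaze samples per grid cell of size `cell` pixels.

    Returns a list of rows; grid[row][col] is the number of samples
    that fell in that cell. Samples outside the image are ignored.
    """
    cols = math.ceil(width / cell)
    rows = math.ceil(height / cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in samples:
        if 0 <= x < width and 0 <= y < height:
            grid[int(y // cell)][int(x // cell)] += 1
    return grid

def looked_at(grid, row, col, min_samples):
    """True if a cell of interest collected at least `min_samples` samples."""
    return grid[row][col] >= min_samples

# Hypothetical gaze samples on a 100x100 pixel image.
samples = [(10, 10), (12, 14), (55, 20), (90, 90), (150, 10)]
grid = gaze_heatmap(samples, width=100, height=100, cell=50)

for row in grid:
    print(row)

# Did the student spend at least two samples on the top-left region?
print(looked_at(grid, row=0, col=0, min_samples=2))
```

With samples arriving at a fixed rate, the count in each cell is proportional to the time spent looking there, so a trainer could check whether the regions that matter for the diagnosis received enough attention.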

The last demo I was given was an AR demo using a modified version of the XREAL Light glasses fitted with SeeTrue Technologies eye tracking. I had to undergo a rather long 21-point calibration, and then I could play a little game in which I had to destroy all the elements of a grid by looking at them, trying to look at them only when they became a + and avoiding looking at them when they became a -. The XREAL glasses did not fit my face very well, so I wore them in a weird position, but even in those non-ideal conditions the eye tracking was still mostly working, having issues only when I was looking down. So eye tracking in AR was working fairly well. I have to say that the only problem was in the demo itself: as I always say, eyes are made to explore, and IMHO they shouldn’t be used for interaction unless it is really needed (e.g. for accessibility reasons). The problem was that regardless of whether a block in the grid was becoming a + or a -, my eyes saw that something was changing in front of me, so they went there to let the brain analyze what was happening. As a result, they spent a lot of time looking at the - blocks, making me lose points (I still got the second-best score, though).

The fact that the eyes are meant to explore also makes eye tracking demos dangerous if you are with your special someone. I remember that while I was trying the SeeTrue Technologies glasses, I wanted to test the accuracy of the system, so I said to the employee “Ok, now I'll look at a specific letter on that poster… tell me where you see the red dot in the camera feed now” and I started looking at a poster on a wall. But many people were passing by, including a blond woman, and I remember the guy telling me “Ok, you are looking at the wall, now the lower part of the poster, now the woman with the white shirt, now the letter X on the poster…” I was a bit surprised: I had just unconsciously looked in that direction for a split second, because eyes are meant to explore the surroundings, but still, the eye-tracking tech detected it. I was alone, so things went ok, but imagine if I were married and there with my wife… with other eye tracking technologies, I could have said “No, my darling, it is not what it seems, it is because the calibration of the device is not working well”, but with SeeTrue I would not even have that excuse anymore!

Jokes aside, this is also why eye tracking data is so sensitive privacy-wise: it can detect many things you do unconsciously and so reveal things about you that you don’t want to be revealed.

Anyway, I left the booth of SeeTrue Technologies pretty satisfied. Of course, mine was a quick test of a few minutes, but I thought that what I saw (pun intended) was cool because it solves a well-known problem of eye-tracking technologies.
