Google creates amazing technology to show your face in mixed reality

If you’re a VR enthusiast like me, over the past two days your Twitter feed has surely been flooded with GIFs like this one:

If you’ve been asking yourself “what the heck is happening?”… well, I’m here to help you a bit.

It all started with Google sharing this article on its blog, followed by this super-interesting research paper (I suggest you give it a read!) and some YouTube videos like this one.

When we read all those articles, the first thing we did was look at the calendar… and no, it was not April 1st, so this news had to be real. So those faces behind the headset were real!

Again… what are we talking about? Ok ok, the time has come for some explanations: Google and Daydream Labs (I mean, Google & again Google) have experimented with a new technology to make mixed reality videos cooler than ever.

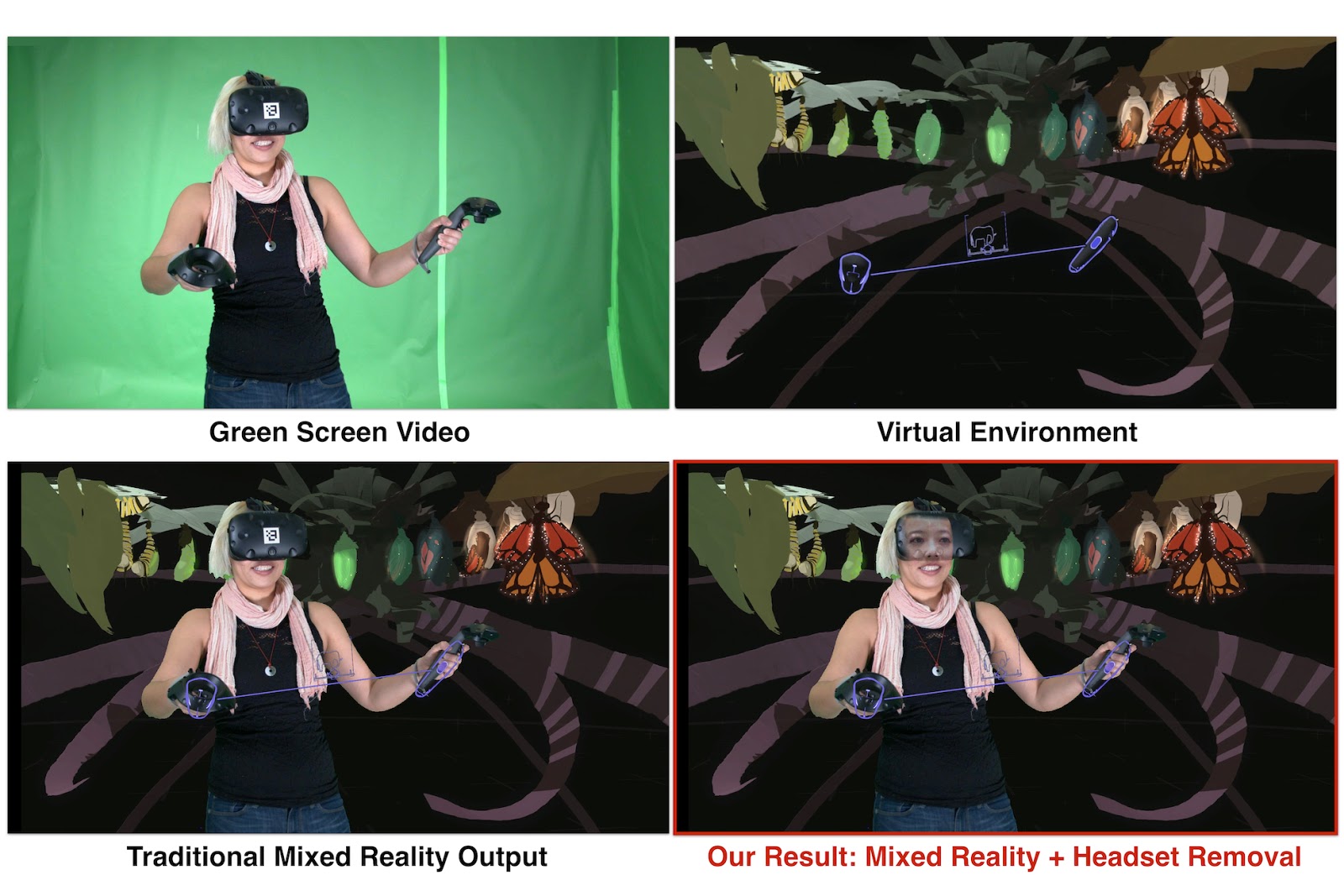

Ever since the Fantastic Contraption team had the brilliant idea of combining a green screen with a person playing in VR, mixed reality has been the best way to show someone playing in virtual reality: you show the player from the real world inside the environment of the virtual world… and a fantastic result is assured.

I agree that these kinds of videos are cool… and that’s why we made some mixed reality videos too, to showcase our Hit Motion game (even if ours were a sort of offline mixed reality, since they don’t show the game while the player actually plays it, but some computer graphics that emulate it).

The folks at Google had an idea: why not show the player’s face in mixed reality while he/she plays the game?

This may seem like a crazy idea, because you can’t see the user’s face when it is covered by the headset during the recording of the video… but for every crazy idea there is some crazy engineer somewhere who wants to solve it in a crazy way… and the people at Google were crazy enough to do it.

So, how did they do it? You can read the research paper linked above to find out, but if you want a tl;dr version…

- Using a special camera, they record a model of the user’s face as he/she looks up, down, left and right. This is essential to capture not only the face with different pupil positions, but also the micro-movements of the face as the user looks in different directions;

- They put a pupil tracker inside the headset;

- As the user plays with the headset on, the system reads the current pupil position from the eye tracker and then tries to reconstruct the best possible face for that pupil position. Remember step 1? Well, they take the model they built there and use it to reconstruct the most probable face for that pupil position;

- Once you have the reconstructed face, you use some magic to glue it onto the video stream. That magic involves the marker you can see on the Vive headset in the video;

- Enjoy!
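To make step 3 a bit more concrete, here is a toy Python sketch of the “interpolate some keyframes of the face” idea. It is NOT Google’s code (they haven’t released it) and not necessarily their method: it assumes each calibration keyframe from step 1 is stored together with the (yaw, pitch) gaze angles at which it was captured plus a flattened face texture, and it blends the k keyframes closest to the current gaze using inverse-distance weighting. `FaceKeyframe`, `blend_face` and this data layout are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class FaceKeyframe:
    # Hypothetical calibration sample (step 1): gaze angles in degrees...
    gaze: Tuple[float, float]  # (yaw, pitch)
    # ...and a flattened face texture; all keyframes share the same length.
    pixels: List[float]


def blend_face(keyframes: Sequence[FaceKeyframe],
               gaze: Tuple[float, float],
               k: int = 3,
               eps: float = 1e-6) -> List[float]:
    """Approximate the face for `gaze` from the k nearest calibration keyframes.

    Toy inverse-distance weighting in (yaw, pitch) space; a stand-in for
    whatever reconstruction the real system uses.
    """
    if not keyframes:
        raise ValueError("need at least one calibration keyframe")

    # Rank keyframes by Euclidean distance between recorded and current gaze.
    ranked = sorted(keyframes, key=lambda kf: math.dist(kf.gaze, gaze))
    nearest = ranked[:k]

    # If the eye tracker reports (almost) exactly a recorded gaze, reuse that frame.
    if math.dist(nearest[0].gaze, gaze) < eps:
        return list(nearest[0].pixels)

    # Closer keyframes get larger weights; weights are normalized to sum to 1.
    weights = [1.0 / math.dist(kf.gaze, gaze) for kf in nearest]
    total = sum(weights)

    out = [0.0] * len(nearest[0].pixels)
    for w, kf in zip(weights, nearest):
        for i, p in enumerate(kf.pixels):
            out[i] += (w / total) * p
    return out


if __name__ == "__main__":
    # Three hypothetical keyframes: looking straight, left and right.
    calibration = [
        FaceKeyframe((0.0, 0.0), [0.5, 0.5]),
        FaceKeyframe((-20.0, 0.0), [0.0, 1.0]),
        FaceKeyframe((20.0, 0.0), [1.0, 0.0]),
    ]
    # Gaze halfway between "straight" and "right": an even blend of the two.
    print(blend_face(calibration, (10.0, 0.0), k=2))  # [0.75, 0.25]
```

Inverse-distance weighting is just the simplest choice that fits the description; the point of the sketch is that the output is a mix of recorded frames rather than a live image of the face, which helps explain why such reconstructions can look slightly “off”.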

The results are super-interesting… I love this kind of research!

But, since I’m a very bad person, I always have to ruin your happy moments. My honest opinion about this is that… well, it’s only research. First of all, it requires a headset with built-in eye tracking, and almost no one has one at home (except maybe the people who bought a FOVE). Then, as you may notice, the results can be pretty creepy. The faces do not look natural, and the reason lies inside our brain: apart from procrastinating by looking at cat GIFs, our brain is super-talented at spotting anomalies in the human images around us. This is one of the reasons why it is so difficult to make a convincing motion-captured animated character… and why we are good at spotting photoshopped people in photos. The reconstruction in step 3 is made by interpolating some keyframes of the face… it’s actually a reconstructed 3D face, not a real face… and that’s why we perceive it as strange. Furthermore, they haven’t publicly released their software, and when we showcase our results we are all very talented at showing only the best ones: if you have ever read research papers, you know that…

Apart from that, they surely did a great job with this prototype, and the idea behind it is definitely interesting! When eye tracking is built into every headset on the market (2018, maybe?), an improved version of this tech could be useful not only for making cool mixed reality videos but, more interestingly, for making social VR more human. For example, you could make “VR Skype calls” with your headset on, and people could see a reconstructed version of your face even though you are wearing a headset. The same holds for VR worlds. And I’m just beginning to think about the implications of combining this with technologies like Kinect for full-body virtual reality… we could really be ourselves, with our own body and face, in VR! Can’t wait for this to happen…

Nice job, Google, nice job.

(Header Image by Google VR)

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.